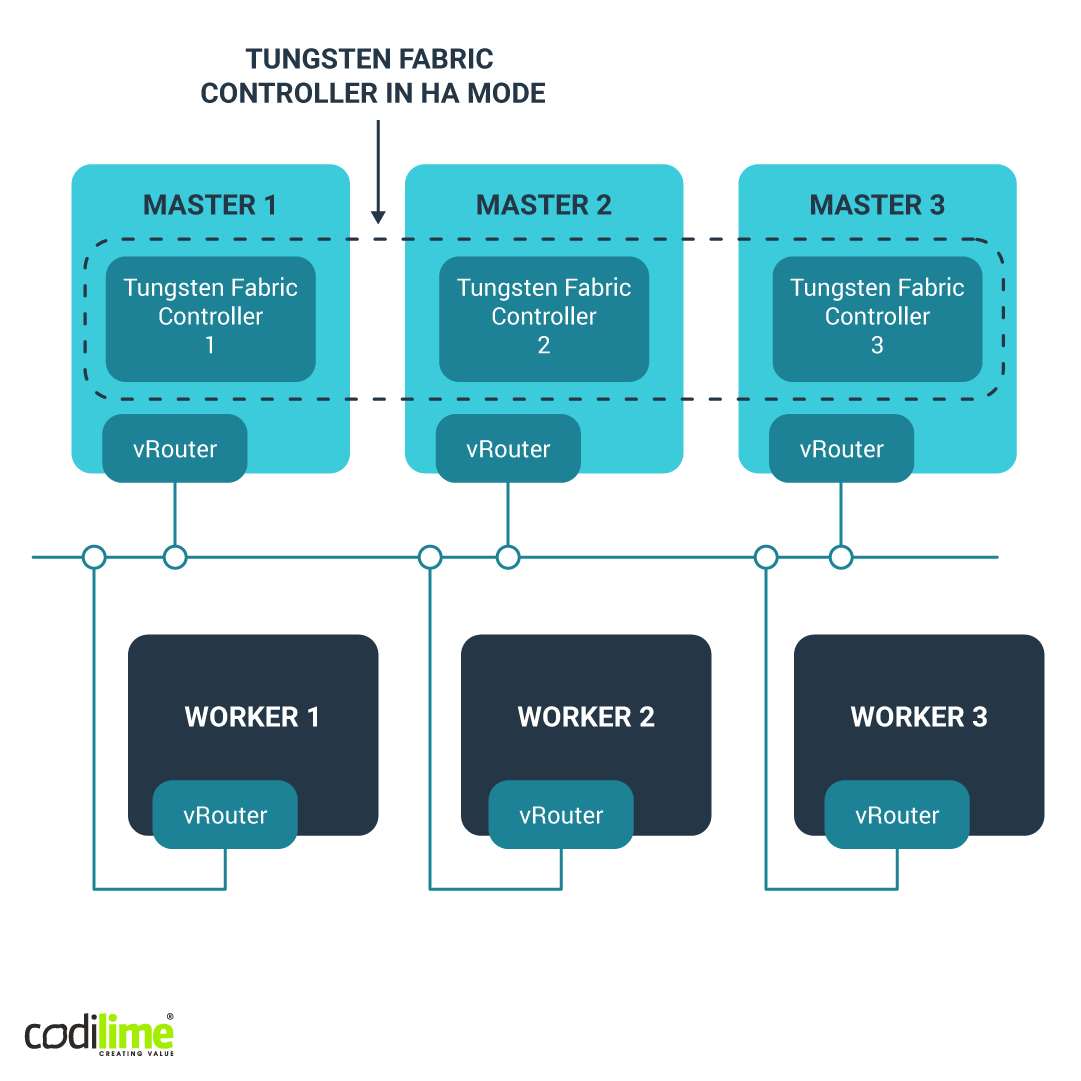

Contrail-operator is a recently released open-source Kubernetes operator that implements Tungsten Fabric as a custom resource. Tungsten Fabric is an open-source, Kubernetes-compatible network virtualization solution that provides connectivity and security for virtual, containerized, or bare-metal workloads. The operator had to be adapted to the OpenShift 4.x platform, whose architecture changed considerably compared with previous versions. In this blog post, you'll read about three interesting use cases and their solutions. All of these solutions are part of the contrail-operator public repository.

Use case 1: inject a kernel module into CoreOS with OverlayFS

OpenShift is a container platform designed by Red Hat. Version 4.x runs on nodes that use CoreOS, an open-source operating system based on the Linux kernel. CoreOS is designed to allow changes to the system only during its first boot. These changes are introduced using ignition configs: JSON files that specify, for example, the services, files, or users to be created. Once the OS is up and running, most of its settings are read-only and users cannot modify system settings.

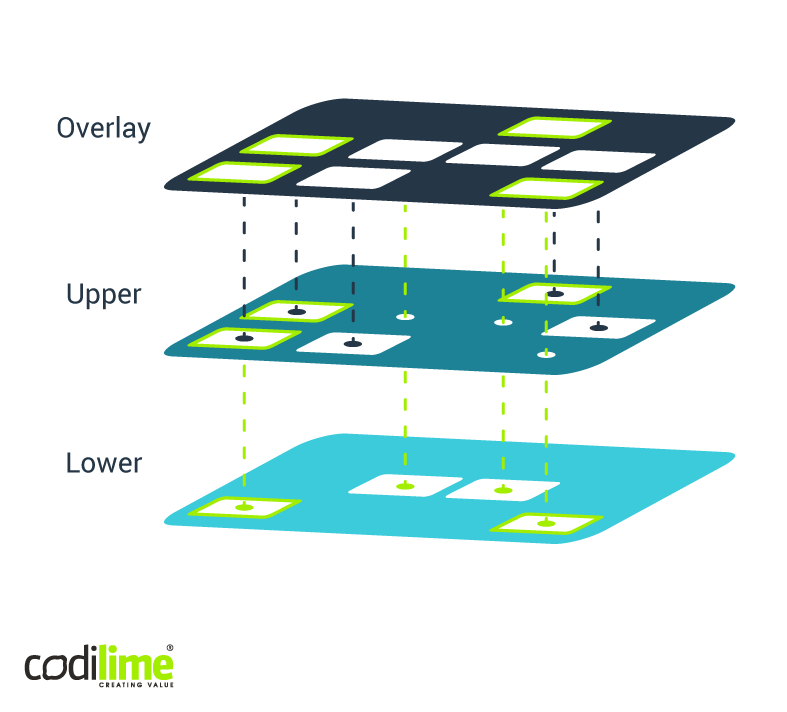

The setup is presented in Figure 1:

In Tungsten Fabric, vRouter is injected into the system as a kernel module. In the contrail-operator (and also in the tf-ansible-deployer, effectively the operator's predecessor), this is done by launching a container that injects the module into the system. In OpenShift, this task is handled by a DaemonSet, which launches a pod on every node. In such a pod, one of the initContainers (containers that run a given operation once and then exit, allowing the main containers to start) injects the kernel module into the system. However, because of how CoreOS works, this operation fails: the container has to place the kernel module in /lib/modules, which is read-only.

The solution to this challenge is OverlayFS, which virtually merges two directories: /lib/modules (read-only) and /opt/modules (writable). An ignition config mounts an OverlayFS on /lib/modules, with /lib/modules as the lower layer and /opt/modules as the upper layer. The latter is writable, so the kernel module can be injected there (see Figure 2). From the perspective of the Tungsten Fabric Controller, this solution changes nothing, so it was not necessary to modify TF itself.
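To make the merge more concrete, here is a toy model of how an OverlayFS mount resolves paths: reads check the writable upper layer first and fall back to the read-only lower layer, while writes always land in the upper layer. This is a simplified Python sketch, not how the kernel implements OverlayFS. The class name OverlayView, the in-memory dictionaries standing in for /lib/modules and /opt/modules, and the module file names are all hypothetical, and details such as whiteouts and copy-up are ignored.

```python
from typing import Dict, List, Optional


class OverlayView:
    """Toy in-memory model of an OverlayFS mount (hypothetical helper).

    `lower` stands in for the read-only /lib/modules and `upper` for the
    writable /opt/modules. Whiteouts and copy-up are deliberately ignored.
    """

    def __init__(self, lower: Dict[str, bytes], upper: Optional[Dict[str, bytes]] = None) -> None:
        self.lower = lower               # read-only layer: this class never writes to it
        self.upper = dict(upper or {})   # writable layer: receives all writes

    def read(self, path: str) -> bytes:
        # A file in the upper layer shadows a file with the same path below.
        if path in self.upper:
            return self.upper[path]
        if path in self.lower:
            return self.lower[path]
        raise FileNotFoundError(path)

    def write(self, path: str, data: bytes) -> None:
        # Writes through the merged mount point always land in the upper layer.
        self.upper[path] = data

    def listdir(self) -> List[str]:
        # The merged view is the union of both layers.
        return sorted(set(self.lower) | set(self.upper))


if __name__ == "__main__":
    # Hypothetical module paths; real ones depend on the kernel version.
    lib_modules = {"kernel/drivers/net/tun.ko": b"tun"}
    mount = OverlayView(lower=lib_modules)

    # "Inject" a module through the merged view...
    mount.write("extra/vrouter.ko", b"vrouter")

    print(mount.listdir())  # ['extra/vrouter.ko', 'kernel/drivers/net/tun.ko']
    print(lib_modules)      # ...while the lower layer stays untouched
```

The point of the model is the one the setup relies on: anything reading the merged directory sees both the original and the injected modules, yet the read-only layer is never modified.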

The ignition config looks like this:

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: master
  name: 02-master-modules
spec:
  config:
    ignition:
      version: 2.2.0
    storage:
      directories:
        - filesystem: "root"
          path: "/opt/modules"
          mode: 0755
        - filesystem: "root"
          path: "/opt/modules.wd"
          mode: 0755
      files:
        - filesystem: "root"
          path: "/etc/fstab"
          mode: 0644
          contents:
            source: "data:,overlay%20/lib/modules%20overlay%20lowerdir=/lib/modules,upperdir=/opt/modules,workdir=/opt/modules.wd%200%200"
```

Source: GitHub

The config creates two directories: /opt/modules, where modules are injected, and /opt/modules.wd, the OverlayFS working directory. It then defines the mount in /etc/fstab:

```
overlay /lib/modules overlay lowerdir=/lib/modules,upperdir=/opt/modules,workdir=/opt/modules.wd 0 0
```
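The source field in the MachineConfig is simply this fstab line, URL-encoded and prefixed with data:,. The Python sketch below shows one way to produce such a value with urllib.parse.quote and to decode it back for checking. The helper names are hypothetical, and the choice to leave /, = and , unescaped is an assumption made so that the output matches the string used in the config above.

```python
from urllib.parse import quote, unquote

DATA_PREFIX = "data:,"


def to_ignition_data_url(text: str) -> str:
    """Hypothetical helper: encode file contents as a plain 'data:,' URL.

    Spaces become %20; '/', '=' and ',' are left as-is so the result stays
    readable, matching the style of the source field in the MachineConfig.
    """
    return DATA_PREFIX + quote(text, safe="/=,")


def from_ignition_data_url(url: str) -> str:
    """Reverse operation, handy for checking what a node will receive."""
    if not url.startswith(DATA_PREFIX):
        raise ValueError("expected a plain 'data:,' URL")
    return unquote(url[len(DATA_PREFIX):])


FSTAB_LINE = (
    "overlay /lib/modules overlay "
    "lowerdir=/lib/modules,upperdir=/opt/modules,workdir=/opt/modules.wd 0 0"
)

if __name__ == "__main__":
    print(to_ignition_data_url(FSTAB_LINE))
```

Running the sketch prints exactly the source string used in the MachineConfig above, which is a quick way to confirm that an edited fstab line is encoded correctly before applying it to the cluster.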

Interestingly, this config is not a typical CoreOS ignition config but an OpenShift custom resource: MachineConfig. It performs the same functions as an ignition config, but it is also visible as a cluster resource and can be edited while the cluster is running. In this way, you can apply changes to a CoreOS node even after its first boot, which standard CoreOS-based deployments do not usually support. This feature is specific to OpenShift.


Use case 2: set nftables rules in CoreOS with an ignition config

CoreOS uses nftables, a newer packet-filtering framework than iptables. On a regular system such as RHEL 8, the iptables command-line tool is still available, but its backend is nftables: iptables syntax is translated into the corresponding nftables commands, so you can keep using classic iptables commands and nftables is still configured properly. CoreOS, however, does not expect packet-handling rules to be changed this way, as nobody assumes they will change once the system is up and running.

However, one of the initContainers in the vRouter configuration pod uses the iptables tool for several operations, and that container is based on RHEL 7, which uses the legacy iptables backend. Note that the iptables CLI can work with either the legacy iptables backend or the nftables backend, depending on the backend of the system it was built for.

To check which backend the iptables CLI uses, run iptables --version. If the output includes (nf_tables), the tool uses the nftables backend. If there is no such marker, the tool uses the legacy iptables backend.
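If this check has to run from a script, for example as a preflight step in a container, a small helper can classify the output. The Python sketch below assumes the output format of iptables 1.8.x, which appends (nf_tables) or (legacy) to the version string, while older releases such as the 1.4.x series shipped with RHEL 7 print no backend marker at all. The function names are hypothetical.

```python
import shutil
import subprocess
from typing import Optional


def detect_iptables_backend(version_output: str) -> str:
    """Classify the text printed by `iptables --version` (hypothetical helper).

    iptables 1.8.x prints e.g. "iptables v1.8.4 (nf_tables)" or
    "iptables v1.8.4 (legacy)"; older releases print no marker at all.
    """
    return "nftables" if "(nf_tables)" in version_output else "legacy"


def local_iptables_backend() -> Optional[str]:
    """Run the local iptables CLI, if present, and classify its backend."""
    if shutil.which("iptables") is None:
        return None  # no iptables CLI on this host or in this container
    result = subprocess.run(
        ["iptables", "--version"], capture_output=True, text=True, check=False
    )
    # Inspect both output streams to be safe.
    return detect_iptables_backend(result.stdout + result.stderr)


if __name__ == "__main__":
    print(detect_iptables_backend("iptables v1.8.4 (nf_tables)"))  # nftables
    print(detect_iptables_backend("iptables v1.4.21"))             # legacy
```

Calling local_iptables_backend() inside the RHEL 7-based initContainer would, under these assumptions, report the legacy backend, which is exactly the mismatch described next.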

The iptables build in that container had no nftables support, so the rules could not be set up from the container. Ignition configs solved this challenge: the rules are set up during system boot with the node's native iptables tool:

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: master
  name: 10-master-iptables
spec:
  config:
    ignition:
      version: 2.2.0
    systemd:
      units:
        - name: iptables-contrail.service
          enabled: true
          contents: |
            [Unit]
            Description=Inserts iptables rules required by Contrail
            After=syslog.target
            AssertPathExists=/etc/contrail/iptables_script.sh

            [Service]
            Type=oneshot
            RemainAfterExit=yes
            ExecStart=/etc/contrail/iptables_script.sh
            StandardOutput=syslog
            StandardError=syslog

            [Install]
            WantedBy=basic.target
    storage:
      files:
        - filesystem: root
          path: /etc/contrail/iptables_script.sh
          mode: 0744
          user:
            name: root
          contents:
            # 'data:,' and URL encoded openshift-install/sources/iptables_script.sh
            source: data:...,
```

The full version of the code can be found on GitHub.

This config creates a systemd service that runs once (Type=oneshot) during system boot and executes the script, which the same config writes to /etc/contrail/iptables_script.sh. The full version of the script is available in the GitHub repository. In short, it consists of simple iptables commands that set up the rules needed to run Tungsten Fabric.

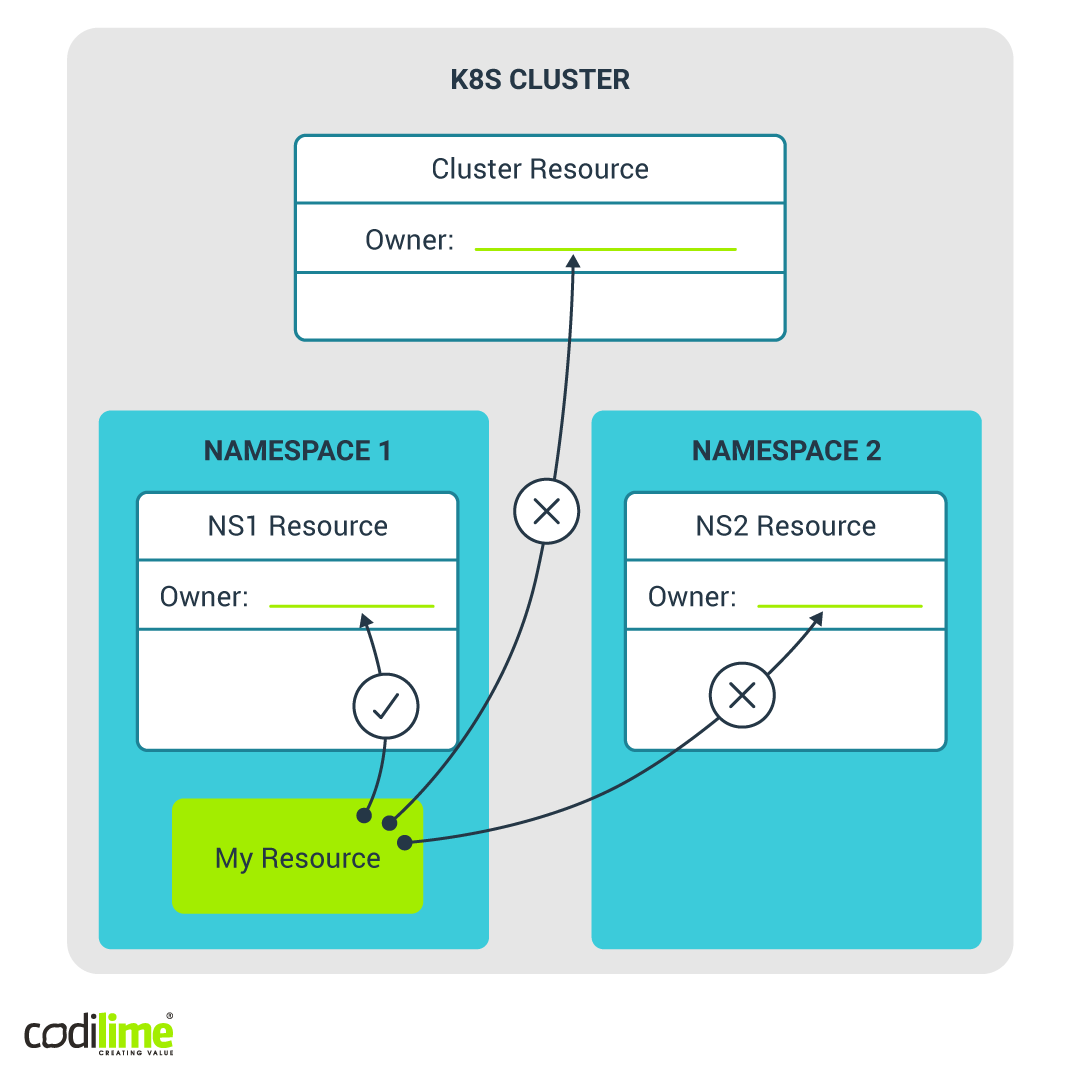

Use case 3: why a namespaced owner orphans a cluster-scoped resource

The last use case concerns Kubernetes itself. During testing, one of the child resources kept being deleted for no apparent reason. An investigation revealed the source of the problem: owner references were set on resources such as PersistentVolume and StorageClass. According to the Kubernetes documentation:

Cross-namespace owner references are disallowed by design. This means that namespace-scoped dependents can only specify owners in the same namespace, and owners that are cluster-scoped. Cluster-scoped dependents can only specify cluster-scoped owners, but not namespace-scoped owners.
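The rule quoted above can be written down as a small predicate. This Python sketch models only scope and namespace; the ObjectRef type, the is_valid_owner function, and the ExampleOwner kind are hypothetical and are not part of any Kubernetes client library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ObjectRef:
    """Hypothetical minimal view of a Kubernetes object for this check."""
    kind: str
    name: str
    namespace: Optional[str] = None  # None means cluster-scoped

    @property
    def cluster_scoped(self) -> bool:
        return self.namespace is None


def is_valid_owner(dependent: ObjectRef, owner: ObjectRef) -> bool:
    """Apply the cross-namespace owner reference rule from the docs."""
    if owner.cluster_scoped:
        # Cluster-scoped owners are allowed for any dependent.
        return True
    if dependent.cluster_scoped:
        # Cluster-scoped dependents cannot have namespaced owners.
        return False
    # Namespaced dependents may only point at owners in the same namespace.
    return dependent.namespace == owner.namespace


if __name__ == "__main__":
    owner = ObjectRef(kind="ExampleOwner", name="tf", namespace="contrail")
    pod = ObjectRef(kind="Pod", name="p", namespace="contrail")
    pv = ObjectRef(kind="PersistentVolume", name="pv-1")
    print(is_valid_owner(pod, owner))  # True
    print(is_valid_owner(pv, owner))   # False
```

Under this rule, a PersistentVolume or StorageClass owned by a namespaced custom resource is an invalid combination, which is the situation described below.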

In our case, the owner reference of a PersistentVolume and a StorageClass (both cluster-scoped resources) pointed to a namespaced resource. That is why the Kubernetes garbage collector kept deleting the whole component. Seeing an owner reference on a cluster-scoped resource, the garbage collector looked for the owner only among cluster-scoped resources. The owner, however, lived in a namespace, so the garbage collector could not find it, treated the resource as orphaned, and deleted it to keep the cluster clean.
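The failure mode can be reproduced with a toy model of the owner lookup. A real Kubernetes OwnerReference carries apiVersion, kind, name and uid but no namespace, so the owner has to be resolved in the dependent's own scope. The Python sketch below mirrors that: a namespaced dependent searches its namespace, a cluster-scoped dependent searches only the cluster scope, and a dependent is treated as orphaned when none of its owners can be found. The data structures, the find_orphans function, and the ExampleOwner kind are hypothetical simplifications that ignore UIDs and API versions; this is not the actual garbage collector code.

```python
from typing import Dict, List, Optional, Set, Tuple

# Toy object key: (namespace, kind, name); namespace None = cluster-scoped.
Key = Tuple[Optional[str], str, str]
# Toy owner reference: (kind, name). Like a real OwnerReference, no namespace.
OwnerRef = Tuple[str, str]


def find_orphans(objects: Dict[Key, List[OwnerRef]]) -> Set[Key]:
    """Return dependents whose owners all fail to resolve (simplified GC)."""
    orphans: Set[Key] = set()
    for (namespace, kind, name), owner_refs in objects.items():
        # Owners are looked up in the dependent's own scope: its namespace,
        # or only the cluster scope when the dependent is cluster-scoped.
        if owner_refs and all(
            (namespace, owner_kind, owner_name) not in objects
            for owner_kind, owner_name in owner_refs
        ):
            orphans.add((namespace, kind, name))
    return orphans


if __name__ == "__main__":
    store: Dict[Key, List[OwnerRef]] = {
        ("contrail", "ExampleOwner", "tf"): [],  # namespaced owner
        ("contrail", "ConfigMap", "tf-config"): [("ExampleOwner", "tf")],
        (None, "PersistentVolume", "tf-pv"): [("ExampleOwner", "tf")],
        (None, "StorageClass", "tf-storage"): [("ExampleOwner", "tf")],
    }
    for key in sorted(find_orphans(store), key=lambda k: (k[1], k[2])):
        print(key)
    # (None, 'PersistentVolume', 'tf-pv')
    # (None, 'StorageClass', 'tf-storage')
```

The namespaced ConfigMap resolves its owner and survives, while the cluster-scoped PersistentVolume and StorageClass point at the same owner but cannot find it, which matches the deletions observed during testing.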