Network orchestration is the automated coordination and management of network resources, configurations, and services to ensure optimal performance, scalability, and security. It uses centralized controllers, AI-driven decision-making, and policy-based automation to dynamically configure and adapt network infrastructure in response to changing demands. By integrating technologies such as SDN (Software-Defined Networking), AI agents, and automation frameworks like Ansible or Terraform, network orchestration reduces manual intervention, minimizes errors, and enhances operational efficiency. You can explore the distinctions between network automation and orchestration in our article Network automation vs. orchestration.

Network orchestration is challenging because it must handle complex, heterogeneous network environments, integrate systems from multiple vendors, adapt in real time, and scale with network growth. Security, error handling, and integration with existing systems add further technical and operational complexity.

The rapid progress in Generative AI (GenAI), particularly in Large Language Models (LLMs), has revolutionized how network operators and engineers want to interact with a diverse ecosystem of network orchestration technologies, frameworks, and automation tools. Traditionally, network automation required deep technical expertise, extensive scripting, and manual execution of predefined workflows using Ansible, Terraform, Netmiko, SDN controllers, or vendor-specific APIs. However, the capabilities of GenAI are shifting this paradigm, enabling natural language-driven automation, GenAI-assisted decision-making, and dynamic orchestration of complex network tasks. All of this is made possible through the user-friendly interfaces offered by various types of chatbots, copilots, and AI assistants.

However, nothing happens on its own. Building mature, reliable tools of this kind requires careful planning, flexible frameworks, and analysis of existing scripts and procedures, combined with the advanced knowledge of experts. The key is to integrate all these elements into a cohesive system that can be gradually expanded with new functionalities. This article focuses on two leading frameworks, LangChain and LangGraph, that can be very helpful if you want to implement a GenAI orchestration system.

Evolution of network operators’ needs

Network operators and engineers are increasingly recognizing the advanced capabilities of LLMs in understanding complex technical contexts. Faced with the growing complexity and responsibility of orchestration tasks, they are beginning to see LLMs as a foundational component in the development of next-generation orchestration tools. These capabilities are not only streamlining workflows but also reshaping their expectations for AI-driven solutions. Among these expectations is the seamless integration of LLM-powered orchestration with existing tools, scripts, and policies. Operators seek natural language interactions that eliminate the need to memorize complex syntax and navigate command-line differences across multi-vendor environments. This can significantly lower the barrier for less experienced engineers. They favor a declarative, intent-based approach for specifying outcomes over manual, imperative configurations. Additionally, they expect clear, actionable recommendations, accompanied by detailed explanations and the ability to review and approve changes before execution. This can ensure both transparency and control.

Instead of manually searching for scripts or writing YAML playbooks, engineers want to say: “fix congestion on site A”, “diagnose packet drops between site A and B and suggest fixes”, or “apply latest security policies on firewalls on site B”. They expect GenAI-powered orchestration tools to process the request and break it down into simpler steps. This requires querying the topology database, analyzing the current configurations, states, logs, and telemetry data of relevant devices, and then clearly presenting executable solutions and asking for final approval before any action is taken.

Integrating existing scripts & frameworks with AI agents

Most network teams already use scripts, playbooks, and automation workflows, but these require manual execution and precise input parameters. They put a lot of knowledge and effort into developing and testing them. GenAI can act as an intelligent assistant that interprets an engineer’s requests, retrieves the right scripts, fills in missing parameters, and explains what will happen before execution. Engineers can review and confirm the proposed actions. Thus, AI can work with existing scripts instead of replacing them, ensuring engineers stay in control.
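The flow described above (pick an existing script, fill in missing parameters, explain, wait for approval) can be sketched in plain Python. This is only an illustration under stated assumptions: `ScriptSpec`, the playbook name `apply_acl.yml`, and the `baseline` default are hypothetical, and the parameters that an LLM would extract from the request are passed in as a ready-made dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class ScriptSpec:
    """Metadata for an existing, already tested automation script (hypothetical)."""
    name: str
    description: str
    required_params: tuple[str, ...]
    defaults: dict[str, str] = field(default_factory=dict)


def build_plan(spec: ScriptSpec, extracted: dict[str, str]) -> dict:
    """Merge parameters extracted from the request with defaults and report gaps."""
    params = {**spec.defaults, **extracted}
    missing = [p for p in spec.required_params if p not in params]
    return {"script": spec.name, "params": params, "missing": missing}


def explain(plan: dict) -> str:
    """Human-readable summary shown to the engineer before anything runs."""
    if plan["missing"]:
        return f"Cannot run {plan['script']}: missing {', '.join(plan['missing'])}"
    args = ", ".join(f"{k}={v}" for k, v in sorted(plan["params"].items()))
    return f"Will run {plan['script']} with {args}"


def execute(plan: dict, approved: bool) -> str:
    """Run only complete plans that the engineer explicitly approved."""
    if plan["missing"] or not approved:
        return "skipped"
    # A real system would invoke the existing script or playbook here.
    return f"executed {plan['script']}"


spec = ScriptSpec(
    name="apply_acl.yml",
    description="Apply an ACL to the firewalls of one site",
    required_params=("site", "acl_name"),
    defaults={"acl_name": "baseline"},
)
plan = build_plan(spec, {"site": "B"})
```

Keeping the approval flag outside the plan means the explanation step can never trigger execution by itself, which matches the requirement that engineers stay in control.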

Here, we want to emphasize that designing and thoroughly testing scripts to cover an expanding range of functionalities will be a critical aspect in the development of GenAI-based automation and orchestration systems. While this foundational work still needs to be done manually, GenAI can assist by enabling engineers to apply these scripts effectively and in the right context. Moreover, GenAI copilots can also play a valuable role in helping create such scripts and playbooks in the first place.

AI as a decision-support system for network changes

Network operators want both execution and intelligent, contextual suggestions: what actions are possible, what risks are involved, and what the expected impact is. For instance, when an engineer wants to tune routing settings to reduce convergence time, the AI should not only collect and analyze the current settings and propose optimized ones, but also explain the potential risks and rollback options, so that the engineer understands what will happen.

GenAI-driven network troubleshooting

In the traditional approach, engineers manually gather logs, analyze errors, search documentation, and implement fixes. GenAI can automatically identify issues, analyze patterns, and suggest solutions in plain language. For instance, when an engineer asks: “Why is there high packet loss on link A?”, the AI agent can check telemetry data, interface errors, and historical logs and answer: “Link A is experiencing 14% packet loss due to CRC errors. Suggested actions: replace the fiber module or xFP.”

AI-powered policy-based network orchestration

Engineers want to enforce high-level policies, not configure individual devices. GenAI enables intent-based networking, where users describe what they want, and AI translates it into low-level configurations. For instance, when an engineer provides the intention: "Ensure all public cloud instances have restricted SSH access.", an AI-powered orchestrator should scan current firewall policies, identify misconfigured rules, and suggest automated fixes before applying changes. Instead of manually writing security policies, operators simply state the goal, and AI enforces it. For more information on intent-based approaches, you can read our articles: Source of Truth vs. Source of Intent in network automation and A brief intro to the Network Automation Journey.
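To make the SSH example more concrete, the check that an orchestrator would run after translating the intent could look roughly like the sketch below. It is a simplification under stated assumptions: the `Rule` model is vendor-neutral and hypothetical, and the approved management range `10.0.0.0/8` is invented for the example. Only IPv4 rules are considered.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Simplified, vendor-neutral firewall rule (hypothetical model)."""
    name: str
    source: str  # source range in CIDR notation
    port: int
    action: str  # "allow" or "deny"


# Assumed management range from which SSH is permitted.
ALLOWED_SSH_SOURCES = [ipaddress.ip_network("10.0.0.0/8")]


def violates_ssh_intent(rule: Rule) -> bool:
    """True if the rule allows SSH from outside the approved source ranges."""
    if rule.action != "allow" or rule.port != 22:
        return False
    source = ipaddress.ip_network(rule.source)
    return not any(source.subnet_of(allowed) for allowed in ALLOWED_SSH_SOURCES)


def propose_fixes(rules: list[Rule]) -> list[str]:
    """Suggestions to present for approval; nothing is applied here."""
    return [
        f"Restrict source of '{rule.name}' to {ALLOWED_SSH_SOURCES[0]}"
        for rule in rules
        if violates_ssh_intent(rule)
    ]
```

In this split, the LLM's job is to map the stated goal to a check like this and to explain the findings, while the check itself stays deterministic and testable.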

Need for flexible frameworks

Considering the new needs of operators, it is evident that there is a strong demand for flexible frameworks streamlining the development of GenAI tools that can handle a wide range of both simple and complex network automation and orchestration tasks. These frameworks should fully leverage the capabilities of LLMs and support the intelligent, real-time retrieval of network data from devices and various network support systems. They should also enable efficient use of internal knowledge bases, or even external sources when internal knowledge falls short in completing certain tasks. Moreover, these frameworks must support the modeling of various specialized AI agents. Some agents may execute simple workflows. Others may handle more complex, multi-step processes. In such cases, steps may depend on one another, where one can only start after the previous has been completed. Some steps may run in parallel, while others must follow a strict order. There may also be conflicting actions, such as multiple steps attempting to modify the same parameter on the same device. The system must be able to detect and resolve such conflicts. For critical steps, confirmation by experienced staff may be required before proceeding.
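The ordering and conflict requirements above can be illustrated with a small standard-library sketch. It is not taken from any of the frameworks discussed here; the step names, devices, and parameters are hypothetical. Steps are grouped into batches that may run in parallel, and steps writing the same parameter on the same device are reported as conflicts.

```python
from collections import defaultdict
from graphlib import TopologicalSorter

# Hypothetical steps: name -> (device, parameter it modifies, prerequisite steps)
STEPS = {
    "backup_r1": ("r1", None, set()),
    "backup_r2": ("r2", None, set()),
    "set_mtu_r1": ("r1", "mtu", {"backup_r1"}),
    "set_mtu_r2": ("r2", "mtu", {"backup_r2"}),
    "tune_mtu_r1": ("r1", "mtu", {"backup_r1"}),
    "verify": (None, None, {"set_mtu_r1", "set_mtu_r2", "tune_mtu_r1"}),
}


def execution_batches(steps: dict) -> list[list[str]]:
    """Group steps into ordered batches; steps within a batch may run in parallel."""
    sorter = TopologicalSorter({name: deps for name, (_, _, deps) in steps.items()})
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


def find_conflicts(steps: dict) -> dict:
    """Return (device, parameter) targets that more than one step tries to modify."""
    writers = defaultdict(list)
    for name, (device, param, _) in steps.items():
        if param is not None:
            writers[(device, param)].append(name)
    return {target: names for target, names in writers.items() if len(names) > 1}
```

A detected conflict, such as two steps both setting the MTU on `r1`, is a natural point to pause and ask experienced staff for confirmation.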

Many frameworks are available for multi-agent orchestration architectures (crewAI, Autogen, FlowiseAI), but here we will focus on LangChain and LangGraph. These open-source solutions stand out as the most mature and widely adopted, yet they continue to evolve dynamically through their vibrant communities, maintaining their strong position in the market. They address the key concepts, components, and their interrelations, which arise from real-world needs and challenges encountered when incorporating LLM capabilities into the development of practical applications across various industries and use cases.

LLMs

Large Language Models are advanced AI systems trained on vast amounts of text data to understand, generate, and interpret human language with high contextual accuracy. In network orchestration, LLMs empower tools to process natural language commands, analyze complex network data, and drive automated decision-making for tasks like troubleshooting, configuration changes, and performance optimization. LLMs are integral to GenAI-assisted tools, enabling them to perform a wide range of tasks when properly prompted. These models enhance network automation and orchestration workflows by parsing and interpreting natural language queries, classifying issues (e.g., performance, security, configuration, monitoring), generating step-by-step action plans, extracting parameters for function calls, and interpreting network data. They can also analyze vendor documentation, make decisions based on contextual data, summarize incident reports, and explain risk factors, all of which contribute to more efficient and effective network management. For even more information about LLMs, check out our more detailed article What is a large language model? LLM explained.

LangChain

LangChain is a comprehensive framework designed to streamline the development of applications powered by LLMs. It offers a suite of components that can be orchestrated to build sophisticated AI-driven systems. Below, we describe the key concepts worth knowing when creating a GenAI-driven assistant tailored for network orchestration.

LLM wrappers

LLM wrappers in LangChain provide a user-friendly interface for interacting with various LLMs from different vendors. By abstracting the complexities of these models, wrappers streamline the integration process, allowing for easy use of multiple AI models in building assistant tools. Additionally, they facilitate the seamless replacement of older models with more powerful or cost-effective versions as they become available.
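The idea behind such wrappers can be shown with a minimal plain-Python sketch. It does not use LangChain's own classes: the `ChatModel` interface, its `complete` method, and the two vendor classes are assumptions made for illustration.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal common interface; the method name is an assumption of this sketch."""

    def complete(self, prompt: str) -> str: ...


class VendorAModel:
    def complete(self, prompt: str) -> str:
        # A real wrapper would call vendor A's SDK here.
        return f"[vendor-a] {prompt}"


class VendorBModel:
    def complete(self, prompt: str) -> str:
        # A real wrapper would call vendor B's SDK here.
        return f"[vendor-b] {prompt}"


def summarize_incident(model: ChatModel, log_excerpt: str) -> str:
    """Application code depends only on the shared interface, not on a vendor."""
    return model.complete(f"Summarize this incident: {log_excerpt}")
```

Because `summarize_incident` accepts anything with a `complete` method, swapping one model for another is a one-line change at the call site.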

Prompt templates

Prompt templates are predefined structures that specify how user inputs, parameters, and data should be formatted into prompts for the language model. They ensure consistency in input formatting, helping guide the model to produce relevant and coherent outputs. In network orchestration, prompt templates can standardize commands or queries by incorporating specific placeholders for data augmentation, which ensures that the AI assistant accurately interprets and processes the input, leading to more reliable results.
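As a rough illustration, a template with named placeholders can be built with Python's standard `string.Template`. LangChain provides its own prompt template classes for this purpose; the sketch below only shows the principle, and the wording of the prompt and the placeholder names are assumptions.

```python
from string import Template

# Placeholders ($device, $vendor, ...) are filled in for every request.
TROUBLESHOOT_PROMPT = Template(
    "You are a network operations assistant.\n"
    "Device: $device (vendor: $vendor)\n"
    "Telemetry:\n$telemetry\n"
    "Question: $question\n"
    "Answer with a diagnosis and numbered remediation steps."
)


def render_prompt(device: str, vendor: str, telemetry: str, question: str) -> str:
    """Fill every placeholder; substitute() raises KeyError if one is missing."""
    return TROUBLESHOOT_PROMPT.substitute(
        device=device, vendor=vendor, telemetry=telemetry, question=question
    )
```

Using `substitute` rather than `safe_substitute` is deliberate: a prompt with an unfilled placeholder fails loudly instead of reaching the model half-formed.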

Chains

Chains in LangChain are sequences of modular components linked together to perform complex tasks. Each component processes its input and passes the output to the next, enabling the construction of intricate workflows. For a GenAI assistant in network orchestration, chains can automate sequences such as analyzing network configurations, identifying issues, and suggesting optimizations.
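The composition principle can be sketched with ordinary functions. LangChain composes its components through its own runnable interface; the `chain` helper, the toy configuration format, and the 1500-byte threshold below are assumptions for illustration only.

```python
from functools import reduce
from typing import Any, Callable

Step = Callable[[Any], Any]


def chain(*steps: Step) -> Step:
    """Compose steps so that each one receives the previous step's output."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def parse_config(text: str) -> dict[str, int]:
    """Parse 'interface mtu' pairs, one per line (toy format)."""
    pairs = (line.split() for line in text.splitlines() if line.strip())
    return {name: int(mtu) for name, mtu in pairs}


def find_issues(mtus: dict[str, int]) -> list[str]:
    """Flag interfaces whose MTU is below the assumed standard of 1500."""
    return [name for name, mtu in mtus.items() if mtu < 1500]


def suggest(issues: list[str]) -> list[str]:
    return [f"Raise MTU on {name} to 1500" for name in issues]


analyze = chain(parse_config, find_issues, suggest)
```

In a real assistant, one or more of these steps would be an LLM call, but the hand-off of outputs from step to step works the same way.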

Document retrieval and Retrieval Augmented Generation (RAG)

Document retrieval involves fetching relevant documents or data in response to a query. Retrieval Augmented Generation (RAG) enhances this by integrating the retrieved information into the prompt, allowing the language model to generate responses grounded in up-to-date and specific data. This is particularly useful in network orchestration, where the assistant can access the latest network policies, logs, or best practices to provide informed recommendations.
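A deliberately naive sketch of the retrieve-then-augment pattern follows. Production systems typically rank documents with embeddings and a vector store; here ranking is plain word overlap so the example stays self-contained, and the knowledge base entries are invented.

```python
import re

# Invented internal knowledge base: document id -> text
KNOWLEDGE_BASE = {
    "vlan-procedure": "To configure a VLAN create the VLAN id then assign access ports to it.",
    "bgp-policy": "BGP sessions must use MD5 authentication and prefix limits.",
    "ssh-policy": "SSH access is restricted to the management subnet.",
}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    query_tokens = tokens(query)
    ranked = sorted(
        KNOWLEDGE_BASE.values(),
        key=lambda text: len(query_tokens & tokens(text)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str) -> str:
    """Augment the question with retrieved context before it is sent to the LLM."""
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The important part is the last function: the model is asked to answer from the retrieved text rather than from its training data alone.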

Tools and tool calling

Tools are functions that the AI assistant can invoke to perform specific actions, such as executing network commands or accessing external APIs. Tool calling refers to the AI's ability to decide when and how to use these tools based on the context of the workflow. In network orchestration, this enables the assistant to execute commands, retrieve data, or modify configurations dynamically, and to make efficient use of existing scripts and playbooks.
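The mechanics of tool calling can be approximated with a registry and a dispatcher. This is a plain-Python sketch, not LangChain's tool API: the `register_tool` decorator, both example tools, and the JSON shape of the tool call are assumptions.

```python
import json

TOOLS = {}


def register_tool(func):
    """Register a function under its name so a model-issued call can find it."""
    TOOLS[func.__name__] = func
    return func


@register_tool
def get_interface_status(device: str, interface: str) -> str:
    """Stand-in for an existing script or device API call."""
    return f"{device}:{interface} is up"


@register_tool
def run_playbook(name: str) -> str:
    """Stand-in for launching an existing playbook."""
    return f"playbook {name} queued for approval"


def dispatch(tool_call_json: str) -> str:
    """Execute a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    func = TOOLS.get(call["name"])
    if func is None:
        return f"unknown tool: {call['name']}"
    return func(**call["arguments"])
```

The model only produces the structured request; the application decides whether and how the named function actually runs, which is where approval checks can be placed.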

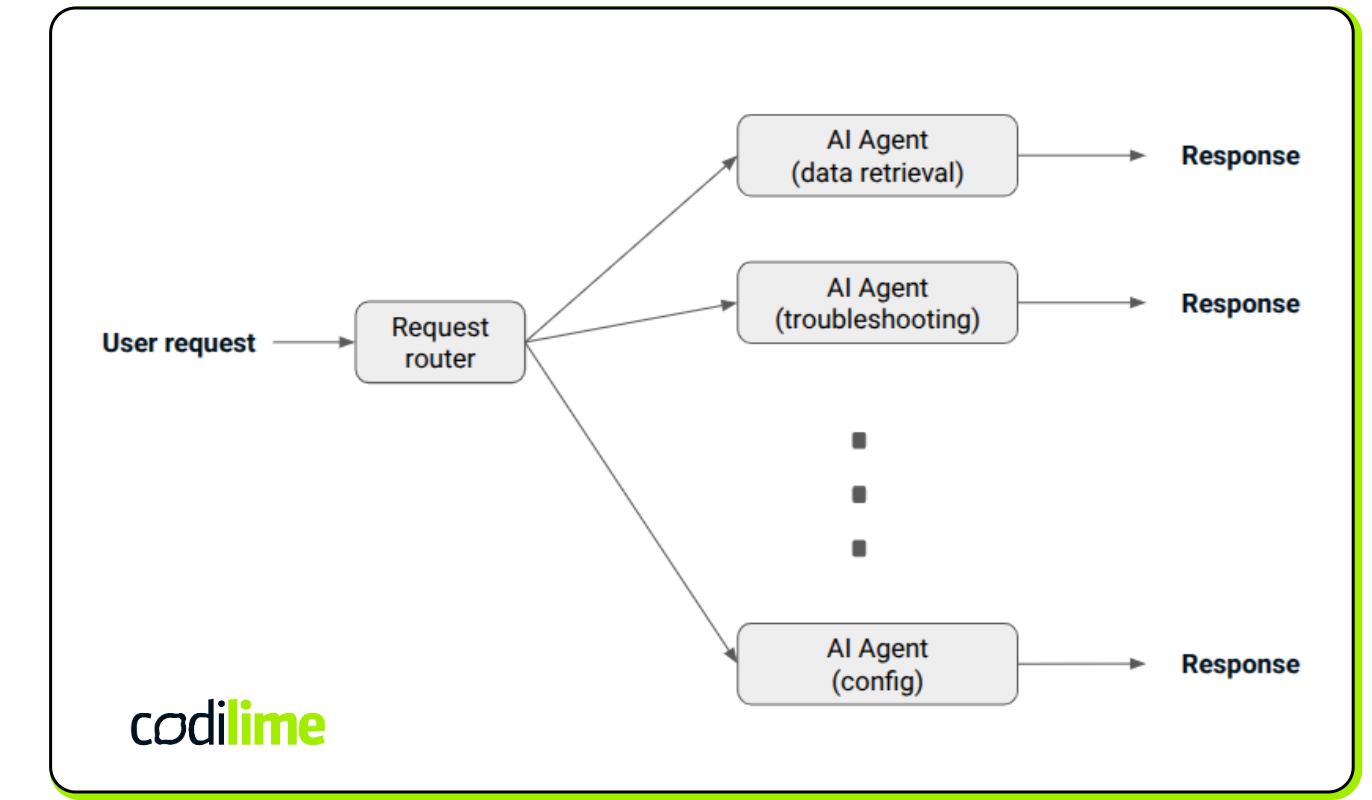

Request routing

Request routing involves directing user inputs to the appropriate components or tools within the system. Effective request routing ensures that each query is handled by the most suitable module, enhancing the efficiency and accuracy of the assistant. In a network orchestration scenario, this could mean routing configuration queries to a specific parser or directing troubleshooting requests to diagnostic tools.
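A minimal sketch of a code-based router is shown below. It scores each request against keyword sets; a semantic-search or LLM-based router would replace the scoring function but keep the same shape. The route names and keywords are assumptions.

```python
import re

# Hypothetical routes: agent name -> keywords that suggest it should handle the request
ROUTES = {
    "configuration": {"configure", "vlan", "acl", "apply", "policy"},
    "troubleshooting": {"loss", "drops", "diagnose", "why", "congestion"},
}


def route(request: str, default: str = "general") -> str:
    """Pick the agent whose keywords overlap most with the request."""
    words = set(re.findall(r"[a-z]+", request.lower()))
    scores = {agent: len(words & keywords) for agent, keywords in ROUTES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default
```

Falling back to a default route when nothing matches avoids forcing an unrelated request onto a specialized agent.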

AI agents

Agents in LangChain are autonomous entities that can make decisions about which actions to take, including which tools to use, based on the inputs they receive. They are capable of interacting with external resources and can adapt their behavior according to the context. For a GenAI assistant in network orchestration, agents can autonomously execute network diagnostics, apply configurations, or escalate issues as needed.

Memory

Memory in AI systems refers to the ability to store and recall conversational history, which is essential for maintaining context over time. In network orchestration, memory allows the system to remember previous commands, configurations, and interactions, ensuring continuity in multi-step processes. This capability enables more effective decision-making and avoids redundant tasks, as the system can reference past actions to adjust or optimize future ones.
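One simple form of memory is a bounded buffer of recent turns that is prepended to the next prompt. The sketch below uses only the standard library; the class name and the three-turn window are assumptions, and long-term or summarized memory would need a different design.

```python
from collections import deque


class ConversationMemory:
    """Keep only the most recent turns so the prompt stays within a size budget."""

    def __init__(self, max_turns: int = 3):
        self._turns = deque(maxlen=max_turns)

    def add(self, user: str, assistant: str) -> None:
        self._turns.append((user, assistant))

    def as_context(self) -> str:
        """Render stored turns as text that can be prepended to the next prompt."""
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self._turns)
```

With `maxlen` set, the oldest turn is dropped automatically once the window is full.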

Output parsers

Output parsers are responsible for transforming the raw output from the language model into structured formats suitable for downstream processes. They ensure that the AI's responses are in a predictable and usable format. In network orchestration, output parsers can convert the AI's textual suggestions into actionable commands or configuration files.
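As an illustration, the sketch below extracts a JSON object from free-form model text and validates it into a typed structure. The `ProposedChange` fields and the allowed risk levels are assumptions; LangChain ships its own parser classes, which are not used here.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class ProposedChange:
    device: str
    commands: list[str]
    risk: str


def parse_change(raw: str) -> ProposedChange:
    """Extract the JSON object embedded in model text and validate its fields."""
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in model output")
    data = json.loads(match.group(0))
    if data.get("risk") not in {"low", "medium", "high"}:
        raise ValueError(f"unexpected risk level: {data.get('risk')!r}")
    return ProposedChange(
        device=str(data["device"]),
        commands=[str(command) for command in data["commands"]],
        risk=data["risk"],
    )
```

Rejecting malformed output at this point keeps unvalidated text from ever reaching a device.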

Typical workflow structures

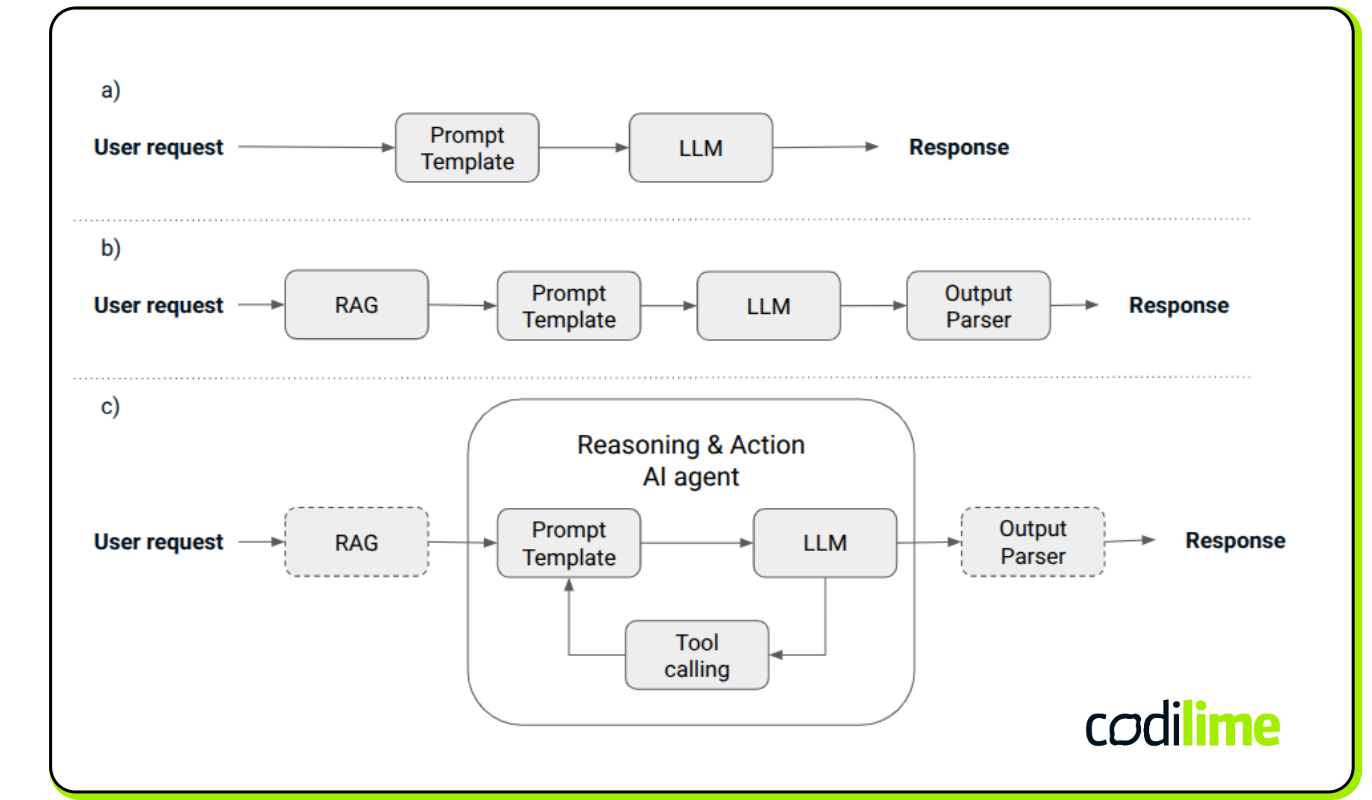

- In Figure 1, we illustrate typical workflows supported by LangChain. Figure 1.a demonstrates a standard chain for querying an LLM, where the input prompt includes the user’s query along with specific instructions guiding the LLM on the expected form of the answer. For example, this can be used when asking, “What is the procedure to configure VLAN on a specific switch model?”

- Figure 1.b shows a more advanced workflow, where the LLM query is supplemented with the RAG technique, allowing the LLM to interpret and integrate relevant documents from an internal knowledge base. In this case, when asking the same question, “What is the procedure to configure VLAN on a specific switch model?”, the LLM will analyze internal data and deliver the procedure accordingly. Additionally, the output may need to be formatted for further processing, which is why an output parser is included at the end of the chain.

- Figure 1.c schematically represents a ReAct agent, a unique construct capable of determining which tools to select and execute in response to user queries. This looped process iteratively extends the prompt and directs it to the LLM, with each tool selected and executed in sequence. While it is a powerful construct that can produce highly accurate results, its effectiveness may diminish if too many tools are involved, which we will explore further later.
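The loop behind such an agent can be sketched without any framework. In the example below, a scripted stand-in replaces the LLM so the control flow is deterministic; `ScriptedModel`, the `interface_errors` tool, and the decision format are all assumptions, not LangChain's agent API.

```python
from typing import Callable


def interface_errors(link: str) -> str:
    """Hypothetical tool; a real one would query telemetry."""
    return f"{link}: 140 CRC errors in the last hour"


TOOLS: dict[str, Callable[[str], str]] = {"interface_errors": interface_errors}


class ScriptedModel:
    """Stand-in for an LLM: replays fixed decisions so the loop is deterministic."""

    def __init__(self, decisions: list[dict]):
        self._decisions = iter(decisions)

    def decide(self, transcript: str) -> dict:
        return next(self._decisions)


def run_agent(model, question: str, max_steps: int = 5) -> tuple[str, str]:
    """Alternate between model decisions and tool calls until a final answer."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        decision = model.decide(transcript)
        if "final" in decision:
            return decision["final"], transcript
        observation = TOOLS[decision["tool"]](decision["input"])
        # Each observation extends the prompt that the model sees next.
        transcript += (
            f"\nAction: {decision['tool']}({decision['input']})"
            f"\nObservation: {observation}"
        )
    return "stopped: step limit reached", transcript
```

The explicit `max_steps` limit is a simple stopping mechanism; without one, a model that keeps choosing tools would loop indefinitely.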

LangChain also supports a simple orchestration scenario where multiple specialized agents are involved, and there is a need to route requests to the appropriate agent (Figure 2). The request router component can be implemented in many ways, for instance using simple code that leverages semantic search to map incoming requests to the most suitable agent based on their specialization, or by preparing specific prompts and allowing the LLM to make the decision.

LangChain is insufficient for GenAI-driven network orchestration

While LangChain offers a robust framework for building LLM applications, it has limitations when applied to the specific demands of network orchestration. It primarily targets linear or simple branching workflows, which might not suffice for the intricate, conditional processes typical of network orchestration. Its state management capabilities may also fall short of the persistent, long-term state tracking required for ongoing network operations. Apart from simple request routing, LangChain does not inherently support the orchestration of multiple agents with specialized roles working in tandem. Modeling workflows that mitigate potential LLM hallucinations can be challenging with LangChain alone, which is a critical concern when building high-performance systems. LangChain also does not directly support reflection mechanisms, in which specially designed prompts make the model assess its own generated output.

For instance, while LangChain’s widely used ReAct agent excels in dynamic reasoning and decision-making, it struggles when overloaded with too many tools in complex, multi-step workflows like network orchestration. The lack of structured execution, insufficiently flexible long-term context management, and inefficient stopping mechanisms lead to excessive tool usage, context loss, and incorrect answers. That is why LangGraph extends the LangChain framework by enabling more complex, structured, and controlled execution of orchestration tasks, ensuring greater precision and efficiency. Also, reflection mechanisms for reducing LLM hallucinations can be integrated with tools like LangGraph. To simplify, it could be said that LangChain might be sufficient for network automation tasks, whereas LangChain combined with LangGraph would be more suitable for network orchestration processes.
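To show what a reflection mechanism means in practice, here is a framework-free sketch of a generate, critique, retry loop. Both `generate` and `critique` are deterministic stand-ins for LLM calls driven by specially designed prompts, and the 1500-byte limit is an invented review rule.

```python
import re


def generate(request: str, feedback: str) -> str:
    """Stand-in for an LLM call: the retry takes the reviewer's feedback into account."""
    return "mtu 1500" if feedback else "mtu 9000"


def critique(draft: str) -> str:
    """Stand-in reviewer: returns an empty string when the draft is acceptable."""
    value = int(re.search(r"\d+", draft).group())
    return "" if value <= 1500 else "MTU exceeds the assumed 1500-byte limit"


def reflect(request: str, generate, critique, max_rounds: int = 3) -> tuple[str, int]:
    """Regenerate until the critique passes or the round limit is reached."""
    feedback = ""
    for attempt in range(1, max_rounds + 1):
        draft = generate(request, feedback)
        feedback = critique(draft)
        if not feedback:
            return draft, attempt
    raise RuntimeError("no draft passed review within the round limit")
```

The cycle between generation and review is exactly the kind of looped, stateful control flow that a graph-based workflow expresses more naturally than a linear chain.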

Summary

In this article, we explored how GenAI, particularly Large Language Models (LLMs), enhances network automation and orchestration tasks. We examined how advancements in LLMs are reshaping the expectations of network engineers, especially in large and complex network environments. We highlighted the need for flexible frameworks that streamline the development of intricate workflows and multi-agent systems for network orchestration. We introduced LangChain and its core concepts, showing how advanced systems can be designed and developed with it. However, we also explained why LangChain alone might not be sufficient to handle the complexity of network orchestration tasks, and recommended the combined use of LangChain and LangGraph.

In Part 2 of this article, we take a deeper look at LangGraph, exploring its key concepts and explaining why it is essential for developing advanced workflows and multi-agent systems in network orchestration. Additionally, we delve into the challenges and business benefits of building GenAI-powered tools to support network automation and orchestration.
