Introduction

In Part 1, we discussed how GenAI, particularly Large Language Models (LLMs), can improve network automation and orchestration tasks. We looked at how the progress of LLMs has shifted the expectations of network engineers, particularly in managing large and complex network infrastructures.

We emphasized the importance of adaptable frameworks that simplify the creation of complex workflows and multi-agent systems for network orchestration, significantly empowered by LLM capabilities. We introduced LangChain and its foundational concepts, illustrating how sophisticated systems can be built and developed. However, we also pointed out that LangChain alone might not be adequate for handling the complexity of network orchestration, suggesting that a combination of LangChain and LangGraph would be more effective.

In this article, we will examine LangGraph, highlighting its core principles and illustrating its importance in creating sophisticated workflows and multi-agent systems for network orchestration. We will also explore the obstacles and potential business advantages of developing GenAI-driven tools to enhance network automation and orchestration.

LangGraph

LangGraph extends LangChain with a framework for orchestrating complex, multi-step workflows with persistent state management, which makes it well suited to building dynamic and context-aware AI applications. In the context of a GenAI-driven network orchestration assistant, LangGraph enables the coordination of multiple specialized agents, real-time decision-making, and adaptive control flows to automate network monitoring, configuration management, and fault resolution. Its structured, graph-based approach helps ensure precise execution of network tasks, integrates human-in-the-loop supervision, and enhances transparency in AI-driven network operations. Below, we outline the key LangGraph concepts that are useful for creating a GenAI-driven assistant focused on network orchestration.

Graph-based workflow representation

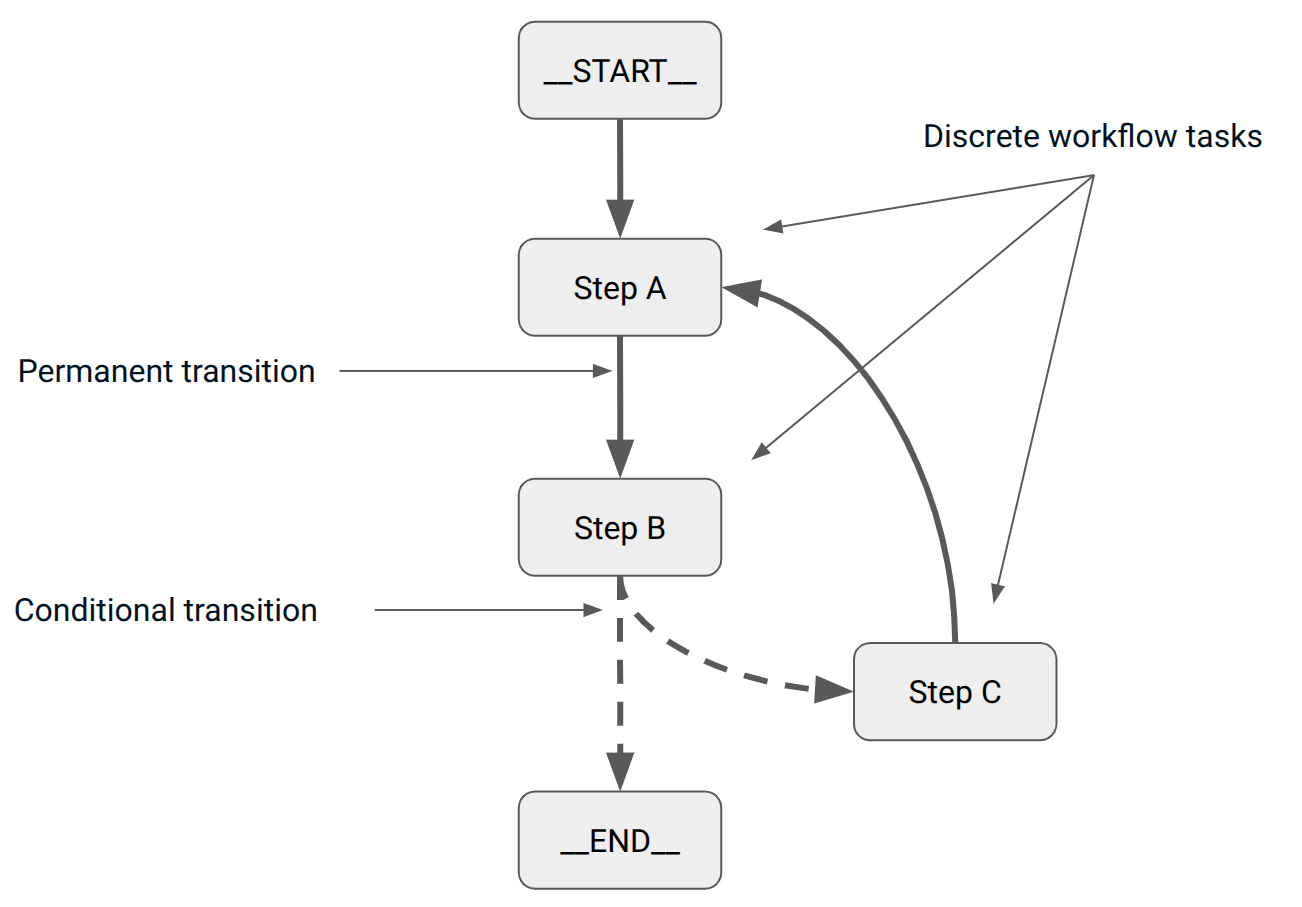

LangGraph’s graph-based workflow representation models tasks as a directed graph, where each node represents a discrete step in the orchestration process, such as executing an automation script, querying network telemetry, or requesting human approval (Figure 1). Nodes can leverage various LangChain components, including LLM calls, prompt templates, chains, tools, document retrievals, agents, and custom Python functions, allowing for a modular and flexible design. Edges define the flow in the form of permanent and conditional transitions between these tasks, where workflow execution dynamically follows different paths based on real-time data, AI-generated or user decisions, or predefined business rules, enabling adaptive and context-aware orchestration.
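To make the node and edge idea concrete, here is a minimal sketch in plain Python. It deliberately does not use LangGraph's own API: the `Workflow` class, the node functions, and the hard-coded telemetry value are all hypothetical names introduced for illustration only. Nodes are functions that return a partial state update, and an edge is either permanent (always the same target) or conditional (a function of the current state).

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, object]
NodeFn = Callable[[State], State]            # returns a partial state update
RouterFn = Callable[[State], Optional[str]]  # returns the next node, or None to stop


class Workflow:
    """Hypothetical, framework-independent directed-graph runner."""

    def __init__(self) -> None:
        self.nodes: Dict[str, NodeFn] = {}
        self.routers: Dict[str, RouterFn] = {}

    def add_node(self, name: str, fn: NodeFn) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        # Permanent transition: always continue with dst.
        self.routers[src] = lambda _state: dst

    def add_conditional_edge(self, src: str, router: RouterFn) -> None:
        # Conditional transition: the router inspects the state.
        self.routers[src] = router

    def run(self, start: str, state: State) -> State:
        current: Optional[str] = start
        visited: List[str] = []
        while current is not None:
            visited.append(current)
            state = {**state, **self.nodes[current](state)}
            router = self.routers.get(current)
            current = router(state) if router else None
        return {**state, "visited": visited}


def query_telemetry(state: State) -> State:
    return {"link_utilization": 0.93}  # assumption: hard-coded sample value


def request_approval(state: State) -> State:
    return {"approved": True}  # stand-in for a real approval step


def run_script(state: State) -> State:
    return {"result": "rerouted traffic"}


wf = Workflow()
wf.add_node("query_telemetry", query_telemetry)
wf.add_node("request_approval", request_approval)
wf.add_node("run_script", run_script)
# Only continue when utilization is above an (assumed) 80% threshold.
wf.add_conditional_edge(
    "query_telemetry",
    lambda s: "request_approval" if s["link_utilization"] > 0.8 else None,
)
wf.add_edge("request_approval", "run_script")

final = wf.run("query_telemetry", {})
```

With the sample value above, the run visits all three nodes in order; with a utilization below the threshold it would stop after the telemetry query.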

State management

State management in LangGraph enables the preservation and dynamic update of state across multiple steps in the orchestration workflow, ensuring that all relevant contextual information, such as network metrics, configuration parameters, or approval statuses, remains intact and available for consecutive workflow steps. This feature is crucial for maintaining continuity in long-running tasks and enables intelligent decision-making based on real-time data. Additionally, state can be preserved for scenarios that require delayed assessment of configuration actions, allowing for a thorough evaluation of their effects before proceeding with further steps.
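One way to picture how state survives across steps is a shared dictionary that each step updates with a partial result. The sketch below is plain Python under stated assumptions, not LangGraph's API: `REDUCERS`, `apply_update`, and the device and key names are hypothetical. Keys with a registered merge rule are combined (here, a log that grows), while all other keys are simply overwritten.

```python
import operator
from typing import Any, Callable, Dict

# Hypothetical per-key merge rules: listed keys are combined,
# every other key is overwritten by the newest value.
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {
    "audit_log": operator.add,  # lists are concatenated
}


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new state with the partial update merged in."""
    merged = dict(state)
    for key, value in update.items():
        if key in REDUCERS and key in merged:
            merged[key] = REDUCERS[key](merged[key], value)
        else:
            merged[key] = value
    return merged


state = {"device": "edge-router-1", "mtu": 1500, "audit_log": []}
# Step 1: a configuration step changes a parameter and logs it.
state = apply_update(state, {"mtu": 9000, "audit_log": ["set mtu 9000"]})
# Step 2: an approval step adds its status without losing earlier context.
state = apply_update(
    state, {"approval_status": "pending", "audit_log": ["approval requested"]}
)
```

After both steps, the state still contains the original device name, the updated MTU, the approval status, and the full log of what happened.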

Conditional branching and dynamic routing

LangGraph enables conditional branching and dynamic routing within orchestration workflows, allowing the process to adjust based on real-time network conditions. For instance, if a network configuration change is requested, the graph may include a conditional branch that directs the process to an approval subgraph for validation before proceeding with the change. Additionally, depending on the type of network issue detected, such as performance degradation, security breaches, or congestion, the system can dynamically route the workflow to the appropriate troubleshooting or remediation path, ensuring a tailored and efficient response to varying network challenges.
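The routing decision described above can be reduced to a function that maps the current state to the name of the next step. This is a minimal plain-Python sketch; the route names, the state keys, and the `manual_triage` fallback are assumptions made for illustration.

```python
from typing import Any, Dict

# Hypothetical mapping from detected issue type to remediation path.
ROUTES: Dict[str, str] = {
    "performance_degradation": "performance_troubleshooting",
    "security_breach": "security_remediation",
    "congestion": "traffic_engineering",
}


def route(state: Dict[str, Any]) -> str:
    """Pick the next workflow step from the current state."""
    # Configuration changes always pass through approval first.
    if state.get("config_change_requested"):
        return "approval_subgraph"
    # Unknown or missing issue types fall back to a human triage step.
    return ROUTES.get(state.get("issue_type"), "manual_triage")
```

For example, `route({"issue_type": "congestion"})` selects the traffic engineering path, while a state with `config_change_requested` set goes to the approval subgraph regardless of issue type.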

Human in the loop

LangGraph facilitates network orchestration workflows while supporting a human-in-the-loop (HITL) approach for critical decision points. This ensures that human verification and approval are incorporated before high-impact changes are made, such as reconfiguring devices or adjusting security policies, and allows for human supervision when AI models detect high-risk events with low confidence. HITL is also essential for verifying AI-generated recommendations, ensuring compliance with regulations, and refining decisions based on business priorities or specific domain knowledge.
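An approval gate of this kind can be sketched as follows. This is plain Python, not LangGraph's interrupt mechanism; the `Action` type, the set of high-impact action kinds, and the 0.8 confidence threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Assumptions for illustration: which actions count as high impact,
# and below which model confidence a human must be consulted.
HIGH_IMPACT = {"reconfigure_device", "change_security_policy"}
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Action:
    kind: str
    target: str
    confidence: float


def needs_human(action: Action) -> bool:
    """High-impact or low-confidence actions require operator approval."""
    return action.kind in HIGH_IMPACT or action.confidence < CONFIDENCE_THRESHOLD


def execute(action: Action, approval: Optional[bool] = None) -> str:
    """Run the action, pausing until an operator decision is supplied if needed."""
    if needs_human(action):
        if approval is None:
            return "paused: awaiting operator approval"
        if not approval:
            return "rejected by operator"
    return f"executed {action.kind} on {action.target}"
```

Calling `execute` without an `approval` value models the paused workflow; calling it again with `approval=True` or `approval=False` models the operator's decision arriving later.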

Multi-agent collaboration

Multi-agent collaboration in LangGraph enables the orchestration of various agents, each designed to handle a specific network function, such as traffic optimization, security enforcement, or fault detection. These agents can work autonomously on their respective tasks while LangGraph coordinates their interactions to ensure a seamless, multi-step orchestration process. By leveraging this collaborative approach, networks can dynamically adapt to changing conditions, address multiple operational needs in parallel, and ensure that the overall network performance is optimized and secure.

Modularity and reusability

Modularity and reusability are key benefits of LangGraph in network orchestration. By structuring tasks as modular nodes, LangGraph enables the reuse of existing automation scripts or tools, integrating them seamlessly into dynamic workflows. Furthermore, repetitive sequences of actions can be enclosed in subgraphs, allowing these subgraphs to be used as single workflow nodes in more advanced scenarios, thus improving efficiency and reducing redundancy. This approach ensures that complex workflows can be easily managed, extended, and adapted to meet the evolving needs of the network.

Integration with external tools and APIs

Integration with external tools and APIs is another key feature of the LangGraph framework, facilitating seamless communication between AI-driven systems and existing network management solutions. By connecting to tools like Ansible, Terraform, or network monitoring APIs, LangGraph enables AI models to trigger real-world actions based on real-time insights, ensuring that network orchestration is not only automated but also responsive to network changes. This versatility enables LangGraph to seamlessly integrate external systems in various ways, ranging from simple tool or API calls within a workflow node to complex, multi-tool agents that autonomously determine which tool to use based on the context.

Reflection and reflexion mechanisms

Reflection and reflexion mechanisms are also key features of LangGraph, enabling advanced adaptability in network orchestration. The reflection mechanism allows agents to perform self-assessment, enabling them to evaluate the outcomes of their previous actions and adjust their strategies accordingly based on real-time feedback or changing network conditions. For example, when using RAG to fetch documents, the retrieved data, such as default parameters, can be verified to ensure they align with best practice guidelines, reducing errors or outdated information. Additionally, the reflexion mechanism helps mitigate LLM hallucinations by refining the model's outputs through external validation, where the effects of actions can be verified and adjusted, ensuring more accurate and effective network decisions.
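The propose, verify, and adjust cycle can be illustrated with a short loop. The sketch below is framework-independent Python; `propose_mtu` stands in for an LLM call, and the 9000-byte limit in `check_mtu` is an assumed best-practice value, not one taken from any real guideline. Because the check is external to the proposer, it is closer to the externally validated variant described above.

```python
from typing import Any, Callable, Optional, Tuple


def reflect_loop(
    propose: Callable[[Optional[Any]], Any],
    evaluate: Callable[[Any], Tuple[bool, Optional[Any]]],
    max_attempts: int = 3,
) -> Tuple[Optional[Any], int]:
    """Propose a candidate, validate it, and retry with the feedback."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        candidate = propose(feedback)
        ok, feedback = evaluate(candidate)
        if ok:
            return candidate, attempt
    return None, max_attempts


def propose_mtu(feedback: Optional[dict]) -> int:
    # Stand-in for an LLM: the first guess is wrong, feedback corrects it.
    return 10000 if feedback is None else feedback["max_allowed"]


def check_mtu(mtu: int) -> Tuple[bool, Optional[dict]]:
    max_allowed = 9000  # assumption: illustrative guideline value
    if mtu <= max_allowed:
        return True, None
    return False, {"max_allowed": max_allowed}


value, attempts = reflect_loop(propose_mtu, check_mtu)
```

Here the first proposal fails validation, the feedback is passed back to the proposer, and the second attempt is accepted.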

Event-driven execution

Event-driven execution enables workflows to be automatically triggered by specific network events or conditions, allowing AI-assisted tools to respond swiftly to anomalies or performance issues in near real time. Unlike traditional systems, where actions are manually initiated by engineers, these workflows are designed to be activated by alerting systems, reducing response times and minimizing human intervention. While this automation streamlines the process and enhances network reliability, it does not exclude interactions with engineers. The system can inform them about the situation and request approval for corrective actions, ensuring that human supervision is maintained where necessary for critical decisions.
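A simple way to picture event-driven triggering is a registry that maps event types to workflow entry points. The following plain-Python sketch assumes a hypothetical `link_down` event and two hypothetical handlers; a real deployment would receive events from an alerting system rather than from a direct function call.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], str]
handlers: Dict[str, List[Handler]] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a handler for one event type."""
    def register(fn: Handler) -> Handler:
        handlers.setdefault(event_type, []).append(fn)
        return fn
    return register


@on("link_down")
def start_failover_workflow(event: dict) -> str:
    return f"failover workflow started for {event['interface']}"


@on("link_down")
def notify_engineer(event: dict) -> str:
    # The engineer stays informed and can be asked for approval.
    return f"engineer notified about {event['interface']}"


def dispatch(event: dict) -> List[str]:
    """Run every handler registered for the event's type, in order."""
    return [handler(event) for handler in handlers.get(event["type"], [])]
```

Dispatching a `link_down` event starts the workflow and informs the engineer; events without a registered handler produce no actions.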

Example of an advanced workflow

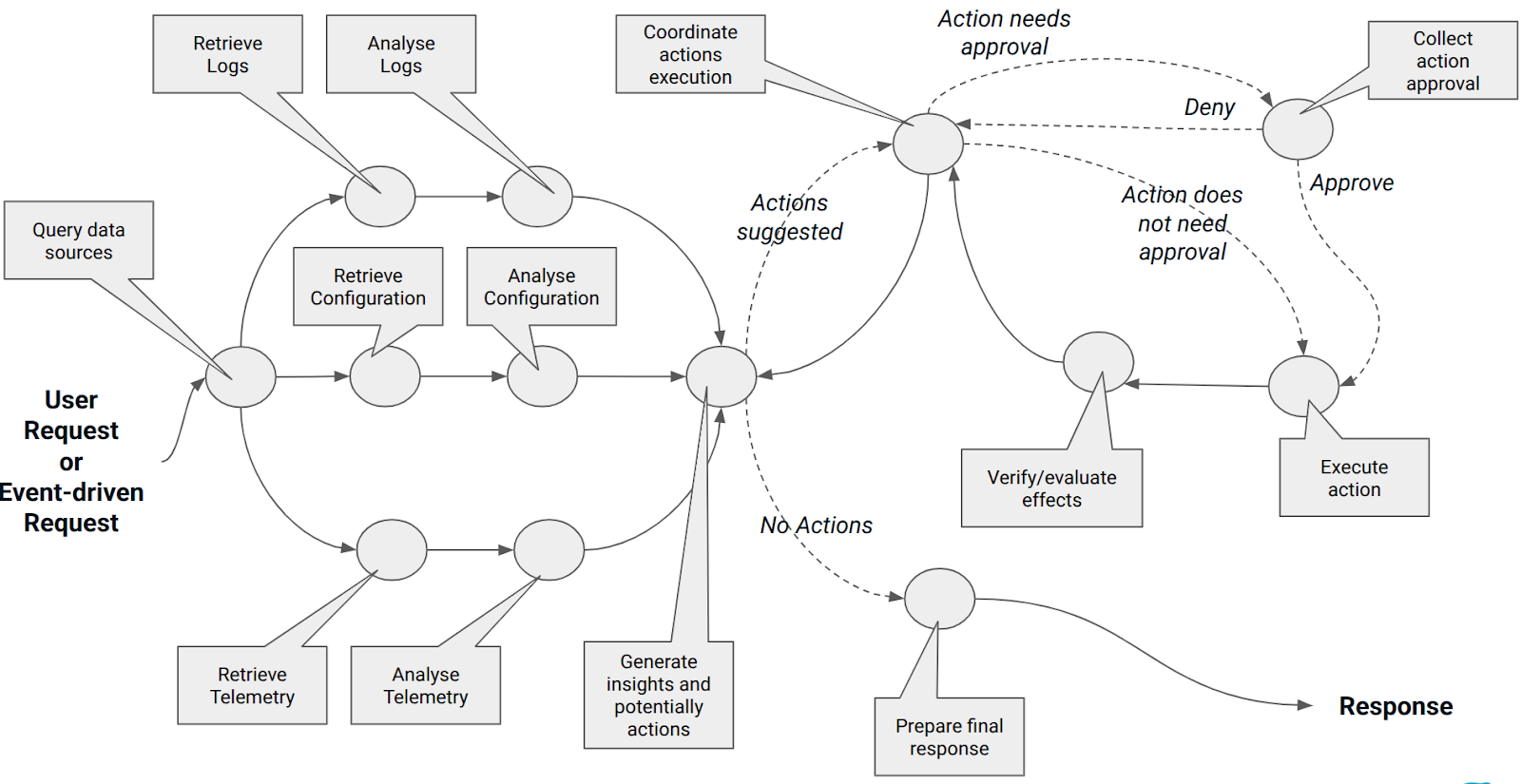

In Figure 2, we illustrate an example of an advanced workflow designed for troubleshooting and resolving issues through suggested actions. The workflow begins by interpreting a user query or an event-driven request to check for potential issues, and then distributes the task into parallel branches. These branches query various network data sources, including logs, telemetry, and configuration, to gather relevant data, which is then analyzed. Once the analysis is complete, the results are combined to generate insights, which may also include recommended remediation actions if any issues are detected. If actions are proposed, the workflow conditionally (dotted edge) proceeds to a node responsible for coordinating the execution of these actions. This node checks whether any actions require approval from a network operator. If an action is approved, or if approval is not necessary, it is directed to a node that selects the appropriate tool for execution. After the action is carried out, the subsequent node evaluates its effect and provides feedback to the coordination node (reflection mechanism). Once all actions are completed, the workflow moves to a final node that either provides additional insights or prepares the final response to the user. It is important to note that the context of the workflow execution, which includes updates introduced by previous nodes, is shared across and updated by all subsequent nodes in the workflow execution graph.
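The parallel data-gathering and combination steps of such a workflow can be sketched with Python's standard thread pool. The three query functions return hard-coded sample data, and the thresholds in `analyze` are assumptions for illustration; this is not how LangGraph itself schedules parallel branches.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict, List


def query_logs(device: str) -> Dict[str, Any]:
    return {"logs": [f"{device}: BGP neighbor down"]}  # sample data


def query_telemetry(device: str) -> Dict[str, Any]:
    return {"telemetry": {"cpu": 0.97}}  # sample data


def query_config(device: str) -> Dict[str, Any]:
    return {"config": {"bgp_enabled": True}}  # sample data


def gather(device: str) -> Dict[str, Any]:
    """Fan out to all data sources in parallel, then merge the results."""
    sources = (query_logs, query_telemetry, query_config)
    with ThreadPoolExecutor(max_workers=len(sources)) as pool:
        results = list(pool.map(lambda fn: fn(device), sources))
    combined: Dict[str, Any] = {}
    for partial in results:
        combined.update(partial)
    return combined


def analyze(context: Dict[str, Any]) -> List[str]:
    """Turn the combined context into recommended actions (may be empty)."""
    actions = []
    if context["telemetry"]["cpu"] > 0.9:
        actions.append("investigate high CPU")
    if any("neighbor down" in line for line in context["logs"]):
        actions.append("reset BGP session")
    return actions


context = gather("core-1")
actions = analyze(context)
```

If `actions` is non-empty, the workflow would continue to the coordination node; otherwise it would go straight to the final response.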

Multi-agent architecture patterns

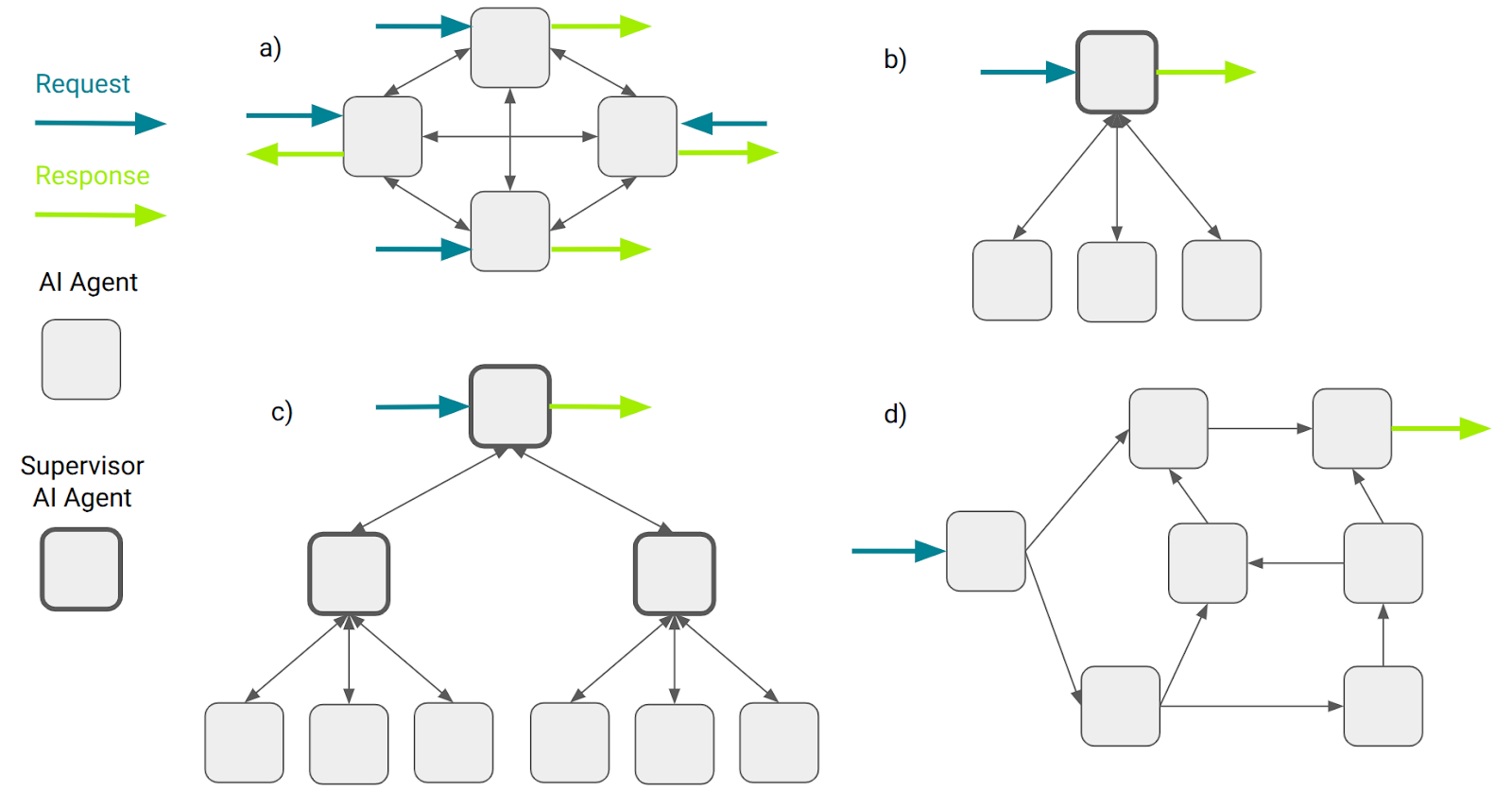

The graph-based workflow representation discussed earlier uses nodes to symbolize various logic elements that implement specific steps within the workflow, without detailing the software components within each node. However, the LangGraph framework is versatile and is often employed to model the architecture of multi-agent systems. While the definition of an AI-agent may vary slightly among practitioners, the most general definition is that "an AI-agent is a system that uses AI to control the flow of an application." This broad definition allows an AI-agent to represent anything from a simple chain or subgraph (with deterministic steps) to a complex, dynamic ReAct agent. Consequently, LangGraph is commonly used to represent workflow graphs, where each node corresponds to a single AI agent, and the overall structure demonstrates how these agents communicate with each other. Typical architectural patterns in multi-agent systems are illustrated in Figure 3.

The Network pattern (Figure 3.a) is a structural model where every agent can communicate with every other agent, and any agent can decide which other agent to call next. In this pattern, a request can be directed to any agent and processed through various paths of agents. Each agent has the ability to either provide the final response or forward the request, along with the workflow execution context, to the next agent for further processing.

The Supervisor pattern (Figure 3.b) is a structural model in which each agent communicates with a single supervisor agent, responsible for making decisions on which agent to call next or providing the final response. This pattern is commonly used in advanced tool-calling scenarios, where tools are grouped within AI-agents to model agent specialization.
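As a rough illustration of the Supervisor pattern, the sketch below uses a fixed plan in place of an LLM-based decision. The agent functions, the `PLAN` list, and the context keys are hypothetical; the point is only that every agent reports back to the supervisor, which decides who runs next or when to stop.

```python
from typing import Any, Callable, Dict, List, Optional

Context = Dict[str, Any]


def fault_detection_agent(ctx: Context) -> Context:
    return {"fault": "packet loss on uplink-2"}  # sample finding


def traffic_optimization_agent(ctx: Context) -> Context:
    # Uses context produced by an earlier agent.
    return {"traffic": f"shifted load away because of {ctx['fault']}"}


def security_agent(ctx: Context) -> Context:
    return {"security": "no policy violations"}


AGENTS: Dict[str, Callable[[Context], Context]] = {
    "fault_detection": fault_detection_agent,
    "traffic_optimization": traffic_optimization_agent,
    "security": security_agent,
}
PLAN: List[str] = ["fault_detection", "traffic_optimization", "security"]


def supervisor(ctx: Context) -> Optional[str]:
    """Pick the next agent, or None when done (a real one might ask an LLM)."""
    for name in PLAN:
        if name not in ctx["completed"]:
            return name
    return None


def run(request: str) -> Context:
    ctx: Context = {"request": request, "completed": []}
    while True:
        name = supervisor(ctx)
        if name is None:
            return ctx  # the supervisor provides the final response
        update = AGENTS[name](ctx)
        ctx = {**ctx, **update, "completed": ctx["completed"] + [name]}


result = run("check uplink health")
```

Agents never call each other directly here; all control flow passes through `supervisor`, which is the property that distinguishes this pattern from the Network pattern.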

The Hierarchical Supervisor pattern (Figure 3.c) is a structural model that builds upon the supervisor model by incorporating a supervisor of supervisors. This extension enables more complex control flows and allows for greater specialization of agents, particularly in the tools they manage. Typically, the top-level supervisor coordinates the responses from lower-level agents and determines the final answer to the request.

The Custom Multi-Agent Workflow (Figure 3.d) is a pattern in which each agent communicates with only a specific subset of other agents. In this structure, certain parts of the flow are deterministic, and only designated agents have the authority to decide which other agents to call next. Typically, this pattern features a starting agent that receives the initial request and an end agent that generates the final response.

Multi-agent architectures facilitate the decomposition of complex workflows, streamlining the development process in three key areas: modularity, specialization, and control. Modularity enables the separation of agents, making it easier to develop, test, and maintain agent-based systems. Specializing AI agents by domain enables focused expertise and efficient resource utilization, activating only relevant agents in context-specific scenarios. Control provides explicit management of agent communication, offering more direct control over system interactions. While the LangGraph framework is particularly well-suited for multi-agent systems, it is also versatile enough to model a broader range of systems and workflows.

Business benefits

Developing GenAI-powered assistants using flexible frameworks for network orchestration can bring substantial business benefits, significantly transforming network management, efficiency, and overall operational performance. Several studies have examined the productivity impact of AI-assisted tools, including:

- How generative AI can boost highly skilled workers’ productivity

- The Impact of AI on Developer Productivity: Evidence from GitHub Copilot

- AI Improves Employee Productivity by 66%

These studies suggest that AI-assisted tools boost the productivity of software developers and engineers by ~40% for experienced professionals and up to ~126% for less experienced ones. While definitions of 'increased productivity' vary, it's widely acknowledged that LLM-powered assistants and copilots significantly aid in producing valuable code more efficiently. They remain imperfect, however, especially for complex projects and development tasks. Even so, we can anticipate comparable improvements among engineers dealing with network orchestration. By automating repetitive tasks, reducing manual configurations, and assisting with troubleshooting, AI-driven assistants enable network engineers to focus on high-value strategic tasks rather than spending time on routine maintenance and diagnostics.

Beyond productivity gains, issue resolution times can be significantly reduced, as AI-powered assistants can instantly analyze logs, detect anomalies, and suggest corrective actions before engineers even begin manual investigation. Faster adaptation to changing network conditions is another crucial advantage, as AI can dynamically optimize configurations, balance traffic, and predict potential failures in real time, ensuring that networks remain resilient and responsive. This level of automation minimizes downtime and service disruptions, directly improving business continuity and reducing costs associated with outages and emergency interventions.

A better network user experience is a direct result of AI-assisted orchestration, as networks become more adaptive and capable of self-healing, leading to higher performance, fewer connectivity issues, and more stable service delivery. AI-driven assistants also enhance security posture by continuously monitoring traffic patterns, identifying anomalies, and responding to potential threats faster than human operators alone. Furthermore, cost optimization is achieved not only through increased efficiency but also by reducing over-provisioning, optimizing resource allocation, and automating compliance with industry regulations.

Lastly, GenAI-powered assistants democratize network management, enabling less experienced engineers to maintain high-performance networks. This approach reduces reliance on senior specialists, whose scarcity in the current job market has become a significant challenge. By combining automation, predictive analytics, and real-time decision-making, AI-driven network orchestration ultimately leads to a more agile, resilient, and cost-effective network infrastructure, ensuring long-term competitive advantages in an increasingly digital world.

Table 1. The benefits and limitations of traditional network management versus AI-assisted network orchestration

| Aspect | Traditional Network Management | AI-assisted Network Orchestration |
|---|---|---|
| Productivity | Limited by manual effort and expertise | Enhances productivity for both experienced and less experienced engineers |
| Suitability for experience levels | Requires highly experienced specialists for complex tasks | Democratizes management, enabling less experienced engineers to maintain networks |
| Task automation | Manual configurations and repetitive tasks consume time | Automates repetitive tasks and reduces manual configurations |
| Issue resolution time | Longer due to manual investigation | Significantly reduces issue resolution time via instant log analysis and anomaly detection |
| Adaptability to network changes | Slower adaptation to changes | Enables faster adaptation with dynamic optimization and predictive failure detection |
| Network performance & user experience | Performance and stability depend heavily on manual tuning | Improves network user experience with adaptive, self-healing capabilities |
| Security | Relies on human operators for threat detection and response | Enhances security with continuous monitoring and faster threat response |
| Cost optimization | Higher costs due to over-provisioning and manual resource allocation | Optimizes costs by reducing over-provisioning and automating compliance |
| Dependence on senior specialists | High reliance on scarce senior specialists | Reduces reliance on senior specialists, addressing current market scarcity |
| Overall network agility & resilience | Less agile and resilient due to slower decision-making | Leads to more agile, resilient, and cost-effective infrastructure |

Challenges

Developing and deploying GenAI-assisted tools for network orchestration also presents several significant challenges that must be carefully addressed to ensure reliable, efficient, and secure operations. One of the most pressing concerns is handling concurrent and contradictory network modifications when multiple users interact with AI-assisted tools simultaneously. If engineers initiate conflicting changes in parallel, it can lead to unstable network behavior, service disruptions, or security vulnerabilities. Implementing conflict resolution mechanisms, such as state-aware AI decision-making, transaction locking, or a human approval step for critical changes, is essential to prevent unintended modifications.
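One possible form of transaction locking is optimistic versioning: a change is accepted only if the device configuration has not been modified since the requester last read it. The sketch below is a minimal in-memory illustration; the `DeviceConfigStore` class and its methods are hypothetical, and a production system would need persistent storage and per-device granularity.

```python
import threading
from typing import Any, Dict, Tuple


class DeviceConfigStore:
    """Hypothetical in-memory store with version checks on every write."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._configs: Dict[str, Tuple[int, Dict[str, Any]]] = {}

    def read(self, device: str) -> Tuple[int, Dict[str, Any]]:
        with self._lock:
            version, config = self._configs.get(device, (0, {}))
            return version, dict(config)

    def write(self, device: str, expected_version: int, changes: Dict[str, Any]) -> bool:
        """Apply changes only if nobody else wrote since expected_version."""
        with self._lock:
            version, config = self._configs.get(device, (0, {}))
            if version != expected_version:
                return False  # conflict: caller must re-read and decide again
            self._configs[device] = (version + 1, {**config, **changes})
            return True


store = DeviceConfigStore()
version_a, _ = store.read("edge-1")  # engineer A reads
version_b, _ = store.read("edge-1")  # engineer B reads the same version
first = store.write("edge-1", version_a, {"mtu": 9000})   # A wins
second = store.write("edge-1", version_b, {"mtu": 1500})  # B is rejected
```

The rejected request is not lost: the second requester can re-read the current configuration, see the competing change, and either retry or escalate to a human approval step.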

Another challenge is the proper decomposition of the AI system into specialized agents, reusable workflow subgraphs, and structured decision-making components. Network orchestration involves multiple tasks—monitoring, configuration, security enforcement, and troubleshooting—requiring careful design of which tasks should be automated, which should be modularized, and where AI or human intervention should take place. Balancing autonomous decision-making with human oversight is critical to ensure reliability, particularly for high-risk network changes that could impact service availability.

Additionally, integrating existing automation scripts, playbooks, and tools into the GenAI-driven system is crucial for leveraging past investments in automation and orchestration. AI should not replace existing well-tested automation but rather enhance it by providing intelligent execution, decision support, and contextual adaptability. Ensuring compatibility with existing frameworks like Ansible, Terraform, or vendor-specific automation APIs reduces the need for redundant tool development and speeds up deployment.

A gradual deployment strategy is necessary to avoid disrupting existing network operations. Organizations should start with low-risk, high-impact tasks, such as AI-driven monitoring and alerting, before expanding into more complex areas like autonomous configuration changes or self-healing networks. Later phases can introduce fully automated decision-making, proactive security measures, and predictive scaling once trust in AI’s capabilities is established.

Comprehensive testing of workflows is another critical challenge, as AI-assisted orchestration must be validated under real-world conditions before being deployed in production. Testing should include simulations, sandbox environments, and controlled rollouts to ensure that the AI correctly interprets network states, triggers the right workflows, and avoids unintended changes.

The financial benefits of AI-assisted network orchestration will not be immediately realized after investment, as organizations must first go through development, integration, training, and adaptation phases. ROI will become apparent over time as efficiency improvements, faster issue resolution, and reduced operational costs accumulate. However, companies need to carefully plan their AI investments and measure performance gains against initial deployment costs.

Lastly, many organizations will need to invest in additional infrastructure, including self-hosted LLMs, to address security concerns related to sensitive network data and compliance requirements. Relying on cloud-based AI models can introduce data privacy risks, especially in industries with strict regulations. Additionally, engineers must learn how to work with AI-driven orchestration, requiring training programs and a clear distribution of responsibilities to ensure that humans and AI work together effectively without over-relying on automation.

Table 2. The primary challenges associated with implementing AI-assisted network orchestration.

| Challenge | Description |
|---|---|
| Concurrent modifications | Managing simultaneous and potentially conflicting network changes by multiple users interacting with AI tools. |
| Agent specialization | Decomposing AI systems into specialized agents and workflows for tasks like monitoring, configuration, and troubleshooting. |
| Integration with existing tools | Ensuring compatibility and leveraging existing automation scripts and tools within the AI-driven orchestration framework. |
| Gradual deployment strategy | Implementing AI features incrementally, starting with low-risk tasks to build trust and avoid disruptions. |
| Comprehensive workflow testing | Validating AI-assisted orchestration through simulations and controlled rollouts to ensure reliability in real-world conditions. |
| Delayed ROI realization | Recognizing that financial benefits from AI integration may take time, requiring careful planning and performance measurement. |
| Infrastructure and training needs | Investing in infrastructure (e.g., self-hosted LLMs) and training programs to address security concerns and ensure effective human-AI collaboration. |

Summary

GenAI-based multi-agent network orchestration leverages AI-driven decision-making and automation frameworks to simplify complex network management tasks, reducing manual intervention and improving operational efficiency. By integrating technologies like SDN, LLM-powered assistants, and existing automation tools, engineers can interact with networks through natural language, enabling AI to translate high-level intents into actionable configurations and optimizations. However, challenges such as preventing conflicting network modifications, designing effective AI-agent workflows, integrating legacy automation, and ensuring security compliance must be addressed to achieve reliable deployment. Gradual adoption, comprehensive testing, and strategic investment are necessary to realize financial benefits, as AI-driven orchestration requires upfront infrastructure and training efforts. Despite these challenges, AI-assisted tools are expected to significantly enhance productivity, minimize infrastructure downtime, optimize costs, and ultimately provide more resilient and adaptable network operations.

The race to develop increasingly advanced LLMs is intensifying, with a parallel drive to uncover how to effectively harness their potential across diverse industries. To address emerging demands and maintain a competitive edge, it is crucial to pursue bold, strategic development of multi-agent systems that can fully leverage the capabilities of these models. Choosing the right frameworks is a key element of this journey.