Kubernetes is an open-source container orchestration system that automates application deployment, scaling and management. Read this two-part blog post to understand the business perspective on Kubernetes. I will present a brief history of virtualization methods, the key concepts on which Kubernetes is built and how it can help your business when it comes to running containerized applications. The second part covers six main reasons to adopt Kubernetes.

First, let’s take a look at the market data on the adoption of Kubernetes. According to a survey conducted by the Cloud Native Computing Foundation, Kubernetes is the leader among container management tools. In the survey, 83% of respondents use Kubernetes (data for July 2018, up from 77% in December 2017), while 58% use it in production and 42% are still evaluating it. The majority of survey respondents were enterprise companies (5000+ employees) and 40% of them are running Kubernetes in production.

According to the 2019 RightScale State of the Cloud Report, the use of Kubernetes is skyrocketing. Its overall adoption among all companies increased from 27% in 2018 to 48% in 2019, and reached 60% among large enterprises (1,000+ employees). The Top 10 Trends for 2019 report predicts that cloud native approaches to application development (including containers and Kubernetes) are set to gain even more ground, as many businesses are planning to adopt them. 451 Research estimates that the market for containers will grow from $762 million in 2016 to $2.7 billion by 2020.

Given the technical jargon and nomenclature that have floated in and out of the IT ecosystem over the past few decades, it is often hard to grasp precisely what a new technology brings to the table from an implementation and performance point of view. So, before diving into exactly why you should consider deploying Kubernetes, I’m going to help you understand what this tech is about, and how it works.

The old approach to virtualization

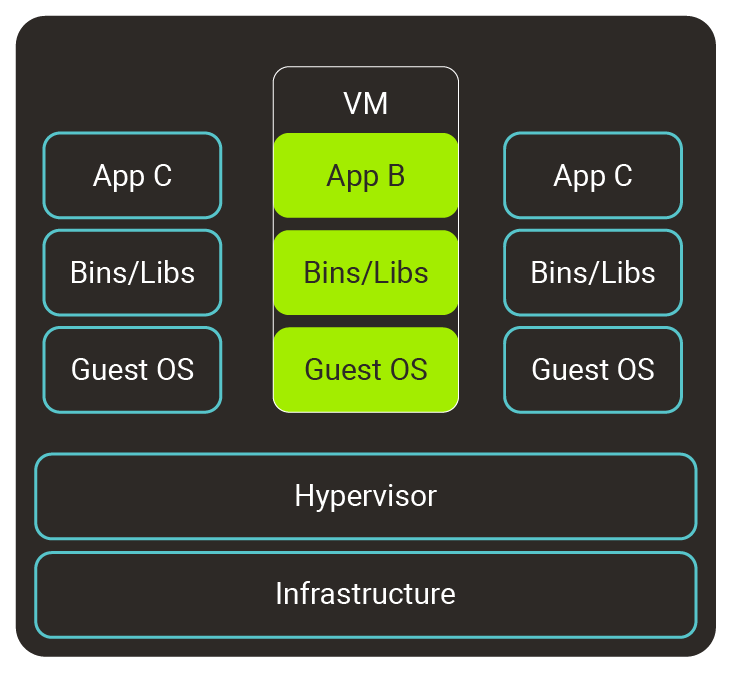

In the very early days, the only way to get more computing power was to add more physical hardware (hence the old designation “server farms”). Then Virtual Machines (VMs) were introduced and the concept of virtualization was born. This concept has played a growing role for almost 20 years. VM software introduced a virtual Hardware Abstraction Layer (HAL), which in turn made it possible to run multiple Operating Systems (OSs) and applications on the same underlying hardware as the parent OS. While this approach has its advantages in the current IT infrastructure landscape, especially where specific complex software goals are concerned, and still holds a substantial market share, it is not free of shortfalls.

A fully-fledged implementation of VMs (Virtualization 2.0 for the sake of comparison) can quickly become a system resource hog, creating the need for faster, and therefore more expensive, hardware. This is especially true in hybrid setups, where multiple competing OSs are required on the same machine because of the range and nature of the apps they must run (not all apps are available on the same operating system). As a result, VMM (Virtual Machine Monitor, or hypervisor) software becomes necessary, making the system more complex. VM implementations are heavyweight and inflexible once set up. After all, in order to run apps, each VM must include not only the full footprint of the OS itself, but also all libraries and dependencies (Libs/Bins) for the entire stack (operating system, device drivers, apps etc.). Each VM must also emulate virtual versions of the underlying hardware, which further hampers performance because an IRQ (Interrupt Request) is not issued directly but goes through a software agent. Put all this into a real-world cloud scenario for a large organisation, or one with demanding business goals, and it ultimately translates into implementation churn, software complexity, limited portability, wasted CPU cycles and GBs, and distinctly more dollars for both hardware and labor from an IT management perspective. Virtualization is neither cheap nor easy to implement.

The new(er) approach to virtualization

Enter, for lack of a better term, virtualization 3.0, the promised land of containerization. Software containers are not a totally new solution, as the concept has been with us for around two decades. Between 2004 and 2008, Solaris introduced Zones, creating standalone application environments, while Google burst upon the scene with its process containers. The Linux kernel, meanwhile, reached version 2.6.24, which added the control groups functionality that LXC (Linux Containers) would later build on. Fast forward to 2013 and beyond: virtualization in the form of containers, as opposed to just VMs, is gaining traction, and for good reason. The Kubernetes project was originally designed and created by Google, which open-sourced it in 2014. Google released version 1.0 in 2015 and partnered with the Linux Foundation to form the Cloud Native Computing Foundation, which now maintains Kubernetes.

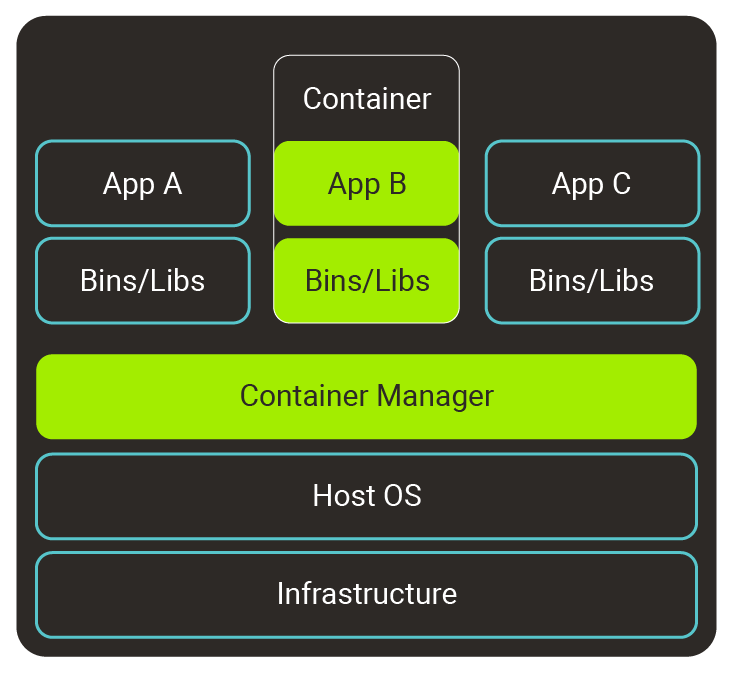

Containers are to VMs what ballet dancers are to sumo wrestlers, and you’ll soon see why. If your organisation requires a very specific form of virtualization, which may be the case for any number of reasons (e.g. running a complex software environment), then VMs are probably still your ticket to ride. For everyone else, containers are, at least initially, the best option. The key difference is that containers all run on the same host OS, which removes the need to replicate an OS for each VM. Further, containers share the kernel of the host OS through direct system calls, and encapsulate only the Libs and Bins that are specific to the apps they run. For these reasons, containers are both lightweight and portable, and far less resource-hungry than any VM you may run.

Those saved CPU cycles and GBs can be used in two ways: to run the same set of container apps with higher system performance and better response times, or to make better use of the underlying hardware by running more container apps. Should you need to re-launch a container app following a software glitch or a security update, a few seconds is all you need, which is a far cry from booting a VM in the same scenario, let alone a hybrid VM configuration. Container-type virtualization does, of course, come with a handful of drawbacks of its own, although they are arguably less severe.

First, containers are intended to be lightweight, portable, and easy to manage. If your business relies on complex apps, however, multiple containers will need to be run. This adds complexity and can eventually lead to performance bottlenecks as the containers compete for access to system resources (both the operating system and hardware). Second, containers are by default not as secure as VMs. That’s because they provide isolation at the process level, not at the level of the OS: there is only one OS running, that of the host, so any security vulnerability of the host OS automatically becomes a threat to the containers. Additional security software is justified where security is paramount. Third, because it makes perfect sense to run an entire collection of containers on one physical system to optimize the delivery of a comprehensive SaaS (Software as a Service) solution, managing them in real time is no longer a manual task. In fact, it requires management software similar to the VMM needed to manage multiple VMs.

What K8s is and is not

At this stage you may be thinking that Kubernetes, or K8s for short, is a software platform for grouping containerized apps, but that’s not quite the case. K8s isn’t about grouping containers and their apps, but about orchestrating them once they have been set up (and grouped) within a platform offering this functionality, such as Docker (the most popular), Mesos, CoreOS rkt, and LXC. Once these are set up in a one-to-many arrangement (e.g. Docker configured with multiple containers and apps in the form of a cluster), software like K8s comes to the forefront to manage it all and make admin tasks less of a burden through automation. In essence, K8s (a Container Manager, CM) is to containers what a VMM is to multiple Virtual Machines: a software management layer that ties it all together and keeps the cogs turning smoothly.

K8s is all about coordinating and scheduling containers so that they can run optimally and gain access to system resources on the fly as load fluctuates throughout the day. Google is an expert in this field, as it has deployed a huge number of servers and apps (probably the largest anywhere) over the past two decades. Kubernetes’ development and design are highly influenced by Google's Borg system, which was created to manage large-scale clusters. Deployable both on-premises and in the cloud, K8s enables you to run container apps across multiple physical machines, and maintains a fail-safe watch layer that restarts crashed containers. It also makes it easy to roll out updates and roll them back, and features auto-scaling that launches and closes containers based on various performance metrics. Last but not least, K8s runs containerized apps in consistent deployments, which makes monitoring them with 3rd party software such as Prometheus, Jaeger, the EFK (Elasticsearch, Fluentd, Kibana) stack or cAdvisor far easier. K8s and a streamlined CM solution are essentially synonymous.
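
To make the self-healing and auto-scaling ideas above a little more concrete, here is a minimal Python sketch. It does not talk to Kubernetes at all: the `reconcile` and `desired_replicas` functions and the container names are hypothetical, invented purely for illustration. The scaling rule follows the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)), but leaves out the tolerances and stabilization behaviour a real cluster applies.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """Scale the replica count in proportion to how far the metric is from its target.

    Simplified version of the documented Horizontal Pod Autoscaler rule;
    it never returns fewer than one replica (an assumption of this sketch).
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replica count and target metric must be positive")
    return max(1, math.ceil(current_replicas * current_metric / target_metric))


def reconcile(running, wanted):
    """Return the actions needed to make the running set match the wanted set.

    `running` maps a (hypothetical) container name to "running" or "crashed".
    `wanted` is the list of container names that should be up.
    """
    actions = []
    for name in wanted:
        status = running.get(name)
        if status is None:
            actions.append(("start", name))      # missing: launch it
        elif status == "crashed":
            actions.append(("restart", name))    # self-healing step
    for name in running:
        if name not in wanted:
            actions.append(("stop", name))       # no longer needed
    return actions


if __name__ == "__main__":
    # Three replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(3, 90, 60))

    state = {"web-0": "running", "web-1": "crashed", "web-2": "running"}
    print(reconcile(state, ["web-0", "web-1", "web-2", "web-3"]))
```

The point of the sketch is the shape of the logic rather than the details: you declare what should be running, and the orchestrator keeps comparing that with what is actually running and closes the gap, whether that means restarting a crashed container or adding replicas under load.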

In the second part of our post - Why your company should use Kubernetes - we’ll present what makes Kubernetes a solution that your business should use to run containerized applications.