Continuous integration and continuous delivery (CI/CD) pipelines help organizations deliver high-quality software efficiently. However, ensuring compliance with security and regulatory policies can be challenging and time-consuming. Open Policy Agent (OPA) and Rego, its declarative policy language, offer a solution to this problem. By using OPA and Rego together, organizations can automate compliance checks within their CI/CD pipelines, reducing the burden on developers and making the development process more efficient.

In the previous article, we presented general information about what policy as code is, what tools we can use, and in which context we can talk about that approach. This article will focus on OPA (Open Policy Agent) and the Rego language used to build such policies.

Problem

To show how powerful OPA can be when combined with other tools, such as a CI/CD pipeline or Terraform for infrastructure as code, let's suppose we have the following problem:

- in an organization there is a convention of tagging resources in the cloud - every created object needs to have at least two tags (Name, Environment),

- all developers are encouraged to deliver frequently and incrementally, not all at once,

- no developer is allowed to create users in IAM.

How can OPA help us resolve this problem?

Solution

As presented in the diagram below, policies can be executed before Terraform applies any changes. When all rules defined in OPA are fulfilled, the pipeline continues and Terraform deploys the changes to the environment. Otherwise, the pipeline is interrupted and Terraform applies no changes.

Using the Rego language, we can implement a rule for each requirement defined in the problem section. What's more, all rules in the policy are independent of the infrastructure code, so we can use the same set of rules for any Terraform code, whichever team prepared it. We only need to remember to execute them just before the Terraform deployment.
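To make the tagging requirement concrete, here is a minimal Python sketch of the kind of check such a rule performs on Terraform's JSON plan. It is only an illustration, not the Rego from the opa-policies repository: the function name `missing_tags` and the sample plan are assumptions, while the `resource_changes`, `change.after`, and `tags` fields follow Terraform's JSON plan format.

```python
# Illustrative only: this mimics the tagging check in plain Python and is
# not the policy shipped in the repository.
REQUIRED_TAGS = {"Name", "Environment"}


def missing_tags(plan):
    """Map each created or updated resource address to the required tags it lacks."""
    problems = {}
    for rc in plan.get("resource_changes", []):
        # "after" is null in the plan when a resource is being deleted.
        after = rc.get("change", {}).get("after") or {}
        tags = after.get("tags") or {}
        missing = REQUIRED_TAGS - set(tags)
        if after and missing:
            problems[rc["address"]] = sorted(missing)
    return problems


# Hypothetical, heavily trimmed stand-in for a tfplan.json document.
sample_plan = {
    "resource_changes": [
        {
            "address": "aws_s3_bucket.localstack_s3_opa_example",
            "type": "aws_s3_bucket",
            "change": {
                "actions": ["create"],
                "after": {
                    "bucket": "localstack-s3-opa-example",
                    "tags": {"Name": "Locastack bucket"},
                },
            },
        }
    ]
}

print(missing_tags(sample_plan))
# {'aws_s3_bucket.localstack_s3_opa_example': ['Environment']}
```

Because the check only reads the plan, the same function works for any Terraform project, which is the independence property described above.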

Now that the general solution is described, let's implement it with open-source tools, which can easily be done on your local machine. The only requirement is to have Docker Desktop.

Implementation

After installing Docker Desktop on a local machine, let's clone the opa-policies repository. The examples/aws/infra directory contains prepared files that allow you to set up:

- Localstack, which enables you to run some of the AWS services on a local machine.

- Jenkins, which is going to execute the CI/CD pipeline.

First, let's build a Docker image containing Jenkins with additional tools such as Terraform, OPA, and the AWS CLI (details can be found in the Dockerfile), and then start the environment:

cd examples/aws/infra

docker build -t jenkins:jcasc .

docker-compose up -d

After that, you should be able to access Jenkins at http://localhost:8080. The credentials needed to authenticate are stored as plain text in the examples/aws/infra/casc.yaml file, so this setup must be secured before any production use.

The Jenkins image we built contains a preconfigured project with one pipeline, which consists of the stages described in the solution section. The whole pipeline is defined in the Jenkinsfile, and two parts of it are particularly important:

- generating the Terraform plan and converting it into JSON:

sh 'cd examples/aws/infra && terraform plan --out tfplan.binary'

sh 'cd examples/aws/infra && terraform show -json tfplan.binary > tfplan.json'

- policy analysis with a final result and score:

sh 'opa exec --decision terraform/analysis --bundle examples/aws/policy examples/aws/infra/tfplan.json'

sh 'opa exec --decision terraform/analysis/score --bundle examples/aws/policy examples/aws/infra/tfplan.json'

sh 'opa exec --decision terraform/analysis/allow --bundle examples/aws/policy examples/aws/infra/tfplan.json'
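To get a feel for the input the policy receives, the following Python sketch summarizes the planned actions in a JSON plan. It assumes only the `resource_changes[].type` and `resource_changes[].change.actions` fields of Terraform's JSON plan format; the function name `summarize_plan` and the inline sample are hypothetical.

```python
import json
from collections import Counter


def summarize_plan(plan):
    """Count planned actions per (resource type, action) pair."""
    counts = Counter()
    for rc in plan.get("resource_changes", []):
        # A replacement is reported as two actions (delete and create),
        # so it is counted once for each of them.
        for action in rc["change"]["actions"]:
            if action != "no-op":
                counts[(rc["type"], action)] += 1
    return counts


# Hypothetical, trimmed stand-in for the contents of tfplan.json.
sample = json.loads("""
{
  "resource_changes": [
    {"address": "aws_s3_bucket.localstack_s3_opa_example",
     "type": "aws_s3_bucket",
     "change": {"actions": ["create"]}},
    {"address": "aws_s3_object.data_json",
     "type": "aws_s3_object",
     "change": {"actions": ["create"]}}
  ]
}
""")

for (resource_type, action), count in sorted(summarize_plan(sample).items()):
    print(f"{resource_type}: {action} x{count}")
```

To inspect a real plan, load the file produced by `terraform show -json` with `json.load` and pass the result to the same function.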

Let's try the pipeline embedded in the Jenkins image we built with docker build. For that purpose, we have prepared very simple infrastructure code that creates one bucket and puts one file into it. Let's remove the Environment tags from both resources in examples/aws/infra/main.tf:

resource "aws_s3_bucket" "localstack_s3_opa_example" {

bucket = "localstack-s3-opa-example"

tags = {

Name = "Locastack bucket"

}

}

resource "aws_s3_object" "data_json" {

bucket = aws_s3_bucket.localstack_s3_opa_example.id

key = "data_json"

source = "files/data.json"

tags = {

Name = "Object in Locastack bucket"

}

}

and commit the changes:

git commit -am "Code without all required tags"

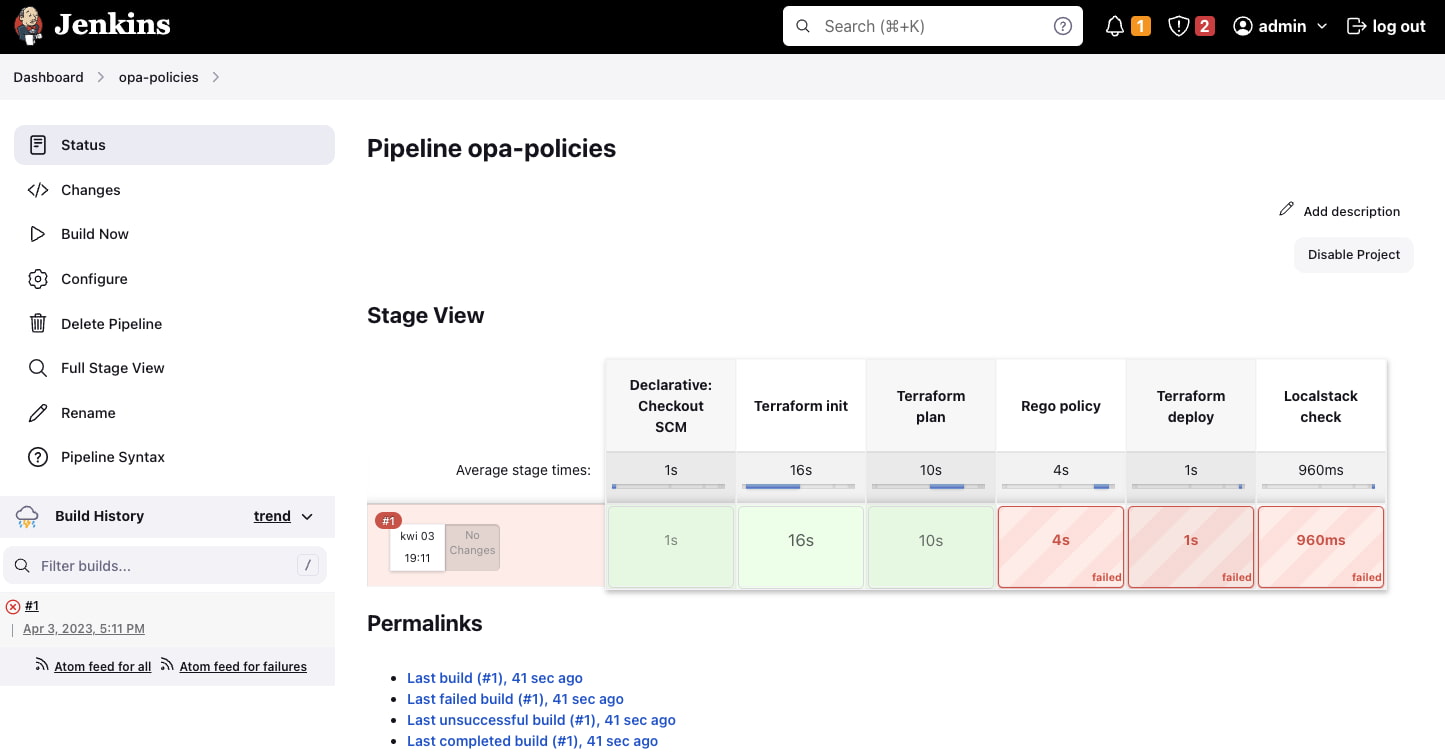

Then let's execute our Jenkins pipeline and check if it fails at the Rego policy stage, as in the below picture:

After checking logs for failed execution, we find false as the result of policy execution:

opa exec --decision terraform/analysis/allow --bundle examples/aws/policy examples/aws/infra/tfplan.json

{

"result": [

{

"path": "examples/aws/infra/tfplan.json",

"result": false

}

]

}

and the score:

opa exec --decision terraform/analysis/score --bundle examples/aws/policy examples/aws/infra/tfplan.json

{

"result": [

{

"path": "examples/aws/infra/tfplan.json",

"result": 11

}

]

}
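The output shown above is plain JSON, so a script can turn it into a pass/fail signal. The sketch below is one possible way to do that, assuming the output shape printed above (a top-level `result` list whose items carry `path` and `result`); the function name `decision_passed` is hypothetical, and this is not necessarily how the Jenkinsfile in the repository handles it.

```python
import json


def decision_passed(opa_output):
    """Return True only if every evaluated input produced a result of true."""
    doc = json.loads(opa_output)
    results = doc.get("result", [])
    # An empty result list is treated as a failure: nothing was evaluated.
    return bool(results) and all(item.get("result") is True for item in results)


# Output of the allow decision for the run without the Environment tags.
failed_run = """
{
  "result": [
    {"path": "examples/aws/infra/tfplan.json", "result": false}
  ]
}
"""

print(decision_passed(failed_run))  # False
```

In a pipeline step, the boolean could be mapped to the process exit code, for example with `sys.exit(0 if decision_passed(text) else 1)`.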

In this run, the committed code violated only one of the requirements: the tags required on every resource created by Terraform. Similarly, we can add other resources that violate the remaining requirements defined in the problem section, e.g.:

- to encourage developers to deliver frequently and incrementally, not all at once, the policy calculates a score that reflects the number of changes to the infrastructure - if we define 30 S3 objects in the Terraform code, the score will exceed the defined limit,

- to disallow creating users in IAM, the policy checks whether the creation of an aws_iam_user resource is planned - if we define that resource in the Terraform code, the Rego policy fails too.
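The two checks in the list above can be sketched in Python as follows. This is only an illustration of the idea: the weights and the limit of 30 are invented for the example, the helper `change` is hypothetical, and the actual values and logic live in the repository's Rego policy.

```python
# Hypothetical weights per action; the real policy may weigh changes
# differently, for example per resource type.
ACTION_WEIGHTS = {"create": 1, "update": 1, "delete": 5}
BLAST_RADIUS = 30  # hypothetical limit


def score(plan):
    """Sum the weights of all planned actions in the plan."""
    return sum(
        ACTION_WEIGHTS.get(action, 0)
        for rc in plan.get("resource_changes", [])
        for action in rc["change"]["actions"]
    )


def touches_aws_iam_user(plan):
    """True if any aws_iam_user resource is created, changed, or deleted."""
    return any(
        rc["type"] == "aws_iam_user" and rc["change"]["actions"] != ["no-op"]
        for rc in plan.get("resource_changes", [])
    )


def change(resource_type, name, action):
    """Build a minimal resource_changes entry for the examples below."""
    return {
        "address": f"{resource_type}.{name}",
        "type": resource_type,
        "change": {"actions": [action]},
    }


# One bucket plus 30 objects in a single plan.
big_plan = {
    "resource_changes": [change("aws_s3_bucket", "bucket", "create")]
    + [change("aws_s3_object", f"object_{i}", "create") for i in range(30)]
}
iam_plan = {"resource_changes": [change("aws_iam_user", "dev", "create")]}

print(score(big_plan), score(big_plan) < BLAST_RADIUS)  # 31 False
print(touches_aws_iam_user(iam_plan))                   # True
```

With these made-up numbers, the plan with 30 objects is rejected because its score is not below the limit, and the IAM plan is rejected regardless of its score.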

The details of the policy used to verify each requirement can be found in the examples/aws/policy/terraform_basic.rego file. The most important part is the allow rule, which contains three expressions (one per requirement), all of which must hold:

allow {

score < blast_radius

not touches_aws_iam_user

all_required_tags

}
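The expressions in a Rego rule body are combined with a logical AND, so allow holds only when all three succeed. The Python sketch below mirrors that conjunction and also reports which expression failed. The `Findings` class and both function names are hypothetical; the score of 11 comes from the failed run above, while the limit of 30 is an assumption made for this example.

```python
from dataclasses import dataclass


@dataclass
class Findings:
    score: int
    blast_radius: int
    touches_aws_iam_user: bool
    all_required_tags: bool


def allow(f):
    """Mirror the rule body: every expression must hold."""
    return (
        f.score < f.blast_radius
        and not f.touches_aws_iam_user
        and f.all_required_tags
    )


def failed_checks(f):
    """List the expressions that do not hold, in rule order."""
    checks = {
        "score < blast_radius": f.score < f.blast_radius,
        "not touches_aws_iam_user": not f.touches_aws_iam_user,
        "all_required_tags": f.all_required_tags,
    }
    return [name for name, ok in checks.items() if not ok]


# The run without Environment tags: small change, no IAM user, tags missing.
untagged = Findings(
    score=11, blast_radius=30, touches_aws_iam_user=False, all_required_tags=False
)

print(allow(untagged))          # False
print(failed_checks(untagged))  # ['all_required_tags']
```

Reporting the failing expression is a convenience of this sketch; the allow decision itself only yields a boolean, as seen in the pipeline logs.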

Back to the Terraform code: now that we have examined the failed pipeline, let's add the Environment tags to both resources in examples/aws/infra/main.tf:

resource "aws_s3_bucket" "localstack_s3_opa_example" {

bucket = "localstack-s3-opa-example"

tags = {

Name = "Locastack bucket"

Environment = "Dev"

}

}

resource "aws_s3_object" "data_json" {

bucket = aws_s3_bucket.localstack_s3_opa_example.id

key = "data_json"

source = "files/data.json"

tags = {

Name = "Object in Locastack bucket"

Environment = "Dev"

}

}

and commit the changes:

git commit -am "Code with all required tags"

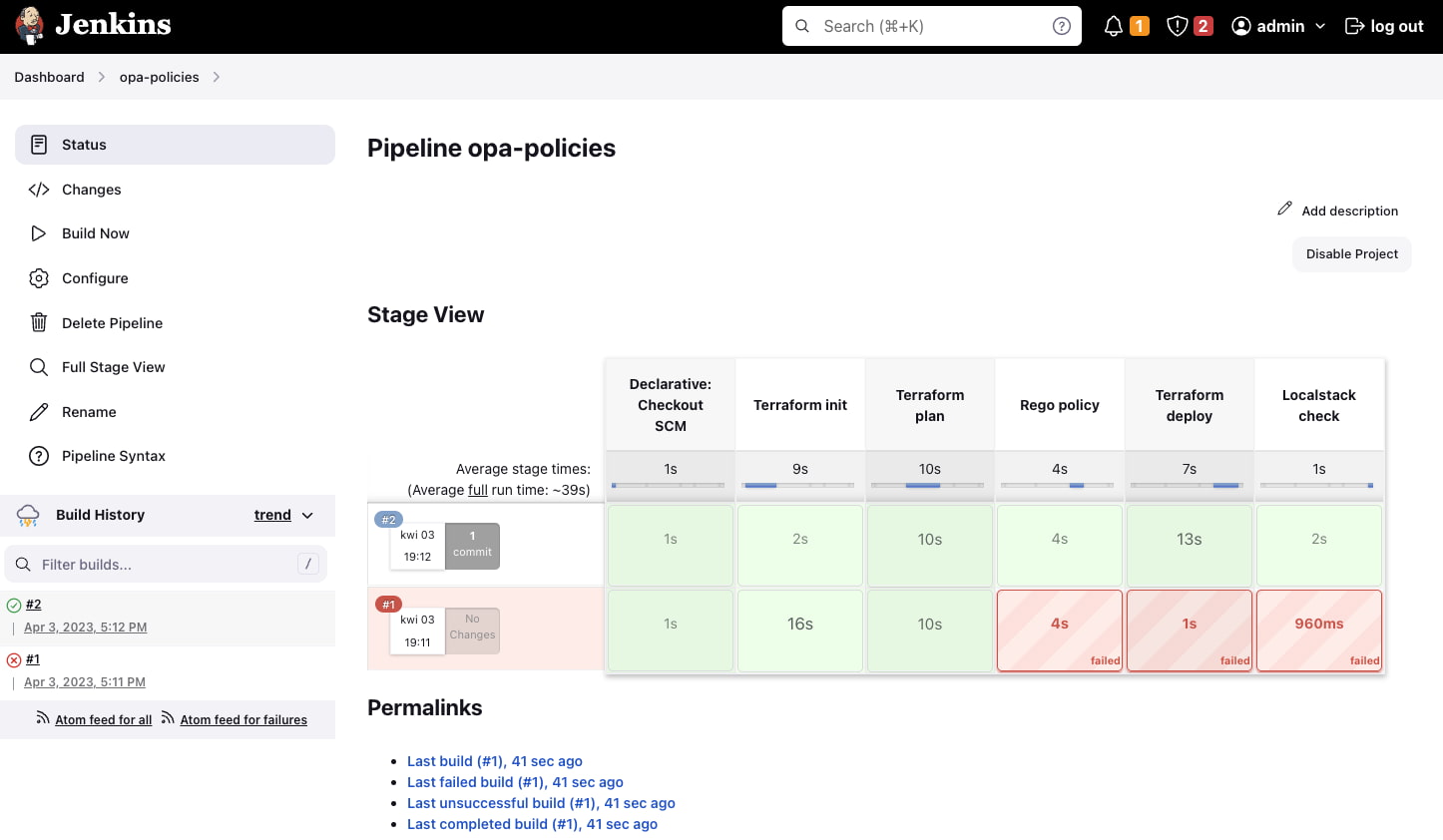

Then let's execute our Jenkins pipeline and check if it has succeeded, as in the below picture:

We can double check our deployment by running AWS CLI:

docker exec -it jenkins bash

# aws --endpoint-url=http://localstack:4566 s3 ls

2023-04-03 17:13:33 localstack-s3-opa-example

# aws --endpoint-url=http://localstack:4566 s3 ls s3://localstack-s3-opa-example

2023-04-03 17:13:33 29520 data_json

Conclusion

OPA enables policies that test the changes Terraform is about to make before it makes them. Such tests help:

- verify compliance with the conventions in the organization (e.g. naming, tagging resources),

- enforce small but frequent changes to the infrastructure (e.g. limit the number of created or updated resources in a single commit),

- block selected changes in the infrastructure (e.g. in IAM).

Such policies are independent of the infrastructure code, so they can be applied before the deployment of any Terraform code, no matter who prepared it.

This practical example shows the power of OPA, but it is far from the whole picture: the features and possible use cases of Rego go well beyond the scope of this article. OPA and Rego can be used with HTTP APIs, Kafka, Kubernetes, and much more.