Cloud-native network functions (CNFs) are a hot topic in network architecture. CNFs use containers as the base for network functions and could therefore replace today’s most widely used standard, Virtual Network Functions (VNFs). In such a scenario, a container orchestration platform such as Kubernetes could be responsible not only for orchestrating the containers, but also for directing network traffic to the proper pods. While this is still an area of active research, it has aroused considerable interest among industry leaders. The Cloud Native Computing Foundation (CNCF) and LF Networking (LFN) have joined forces to launch the Cloud Native Network Functions (CNF) Testbed in order to foster the evolution from VNFs to CNFs. Moreover, Dan Kohn, Executive Director of the CNCF, believes that CNFs will be the next big thing in network architecture.

CodiLime’s research agenda

CodiLime’s R&D team is on top of the research on CNFs. We aspire to play an active role in this burgeoning area, and have developed two solutions for harvesting the benefits of VNFs and CNFs at the same time. Given that these architectures are based on completely different concepts, this is no easy task. This blog post brings the two together by presenting a technical solution to a business case that called for the use of VNFs in a CNF environment. This topic was originally presented at ONF Connect, held on 10-13 September in Santa Clara, California. In the follow-up to this blog post, to be published in the last week of September, we will show how to use Tungsten Fabric to enable smooth communication between OpenStack and Kubernetes. That topic will first be presented at ONS, which is being held on 23-25 September in Antwerp, Belgium.

Harnessing the benefits of VNFs and CNFs all together

Let’s assume that you have the following business case. You have been using containers for their lightness and portability. Flexibility and resilience are the qualities you value the most. At the same time, you want to deploy apps in a simple way. You have been using Kubernetes to manage your resources. There remains just one thorny pain point: a business-critical network function you use is a black-box VM image that cannot be containerized. To solve this, we need to keep using containers while preserving a vital function that runs on a VM.

The advent of virtualized network functions

Before we delve into the details of our solution, I’ll briefly discuss the main features of VNFs and CNFs. The concept of Network Function Virtualization (NFV) was first introduced in 2012 by a group of service providers--AT&T, BT, Deutsche Telekom, Orange, Telecom Italia, Telefónica and Verizon--in response to a problem they were having with network infrastructure. Back then, their services were based on hardware such as firewalls or load balancers supplied by specialized hardware vendors. Everything was installed and configured manually, and new services and network functions often required a hardware upgrade. All of this gave the telcos precious little flexibility. Their solution was to virtualize network functions such as firewalls, load balancers or intrusion detection devices and run them as virtual machines, thus eliminating the need for specific hardware. As a result, new functions could be deployed more quickly, network scalability and agility increased, and the use of network resources could be optimized. Good examples of VNFs include the virtual Broadband Network Gateway (vBNG) and the virtualized Evolved Packet Core (vEPC).

Uncontainerizable VNFs

Yet not every VNF can be moved into a Docker container, for a number of reasons. Sometimes a VNF is a black box from an external vendor (e.g. PAN-OS as a firewall). In such cases, it can only be run as is, without modifying it or creating derivative works. A VNF may also be based on an operating system other than Linux, or its software may be provided as an image of a whole hard drive. In other cases, VM-based network functions do not use an external kernel at all, but are built as unikernels. Such VNFs cannot be containerized and made to share the host kernel that CNFs use. Some network functions are already battle-tested and packaged as VM images, making it hard to change them. A firewall solution might be based on a kernel other than Linux (PF on *BSD). A VPN or other software may use Linux kernel modules which might not be available for the OS used on the worker nodes. There may also be a dependency on older versions of MS SQL Server, MS Exchange or MS Active Directory which are not available as a Docker image. While newer versions of MS SQL Server can run on Linux, some software may still require an older version of this server.

Moving to CNFs

Cloud-native Network Functions (CNFs), on the other hand, are a new way of providing the required network functionality using containers. Here the software is distributed as a container image and can be managed using container orchestration tools (e.g. Docker images in a Kubernetes environment). Examples of CNFs include:

- A Dynamic Host Configuration Protocol (DHCP) service that uses a DB service to keep its state in a DB layer

- A VPN as userspace software, providing a secure tunnel between different clusters

- A Lightweight Directory Access Protocol (LDAP) service that acts as a source of authentication/authorization for an application and stores its data in a DB layer by using a DB service

Business requirements of the solution

Let’s get back to business. We have a VNF that cannot be containerized, but we still want to use CNFs. So we need to create a converged setup for running containerized and VM-based network functions side by side. It has to meet the following requirements:

- The use of a single user interface - the same type of definition for both containers and VMs, and also controlled by a single API (Kubernetes)

- There’s no need to install either Kubernetes on OpenStack or OpenStack on Kubernetes

- There’s no need to manually maintain libvirt installation on top of Kubernetes

- There’s no need to use a separate libvirt instance for each VM, with custom object definitions for VMs (which KubeVirt requires)

Technical solution

To achieve this lofty goal in a technically mature way, we did the following:

- Use Kubernetes and leverage its CRI mechanism to run VMs as pods

- Configure nodes to use criproxy/Virtlet

- Describe the VM using a pod definition, passing the URL of a qcow2 image in place of the usual Docker image for a container

- Run such a pod as a part of Deployment/Replicaset/Daemonset

- Enjoy it equally in the setups of different cloud providers!
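The steps above can be sketched as a small Python script that builds such a manifest. This is a minimal illustration rather than our production configuration: the annotation key, the `extraRuntime` node label and the `virtlet.cloud/` image prefix follow Virtlet's published examples as we understand them and should be checked against the Virtlet version you run, while the image location and all object names are hypothetical placeholders.

```python
import json

# Assumptions (not taken from this article): the annotation key, the node label
# and the "virtlet.cloud/" image prefix follow Virtlet's published examples.
# The image location and all names below are hypothetical placeholders.
VIRTLET_RUNTIME_ANNOTATION = {"kubernetes.io/target-runtime": "virtlet.cloud"}


def vm_pod_template(name, image_location, memory="512Mi"):
    """Return a pod template whose single 'container' is really a VM image."""
    return {
        "metadata": {
            "labels": {"app": name},
            "annotations": dict(VIRTLET_RUNTIME_ANNOTATION),
        },
        "spec": {
            # Schedule only onto nodes prepared for Virtlet (label is an assumption).
            "nodeSelector": {"extraRuntime": "virtlet"},
            "containers": [
                {
                    "name": name,
                    # The qcow2 location goes where a Docker image name would normally go.
                    "image": "virtlet.cloud/" + image_location,
                    "resources": {"limits": {"memory": memory}},
                }
            ],
        },
    }


def vm_deployment(name, image_location, replicas=1):
    """Wrap the VM pod template in an ordinary apps/v1 Deployment."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": vm_pod_template(name, image_location),
        },
    }


if __name__ == "__main__":
    manifest = vm_deployment(
        "firewall-vnf", "images.example.com/firewall.qcow2", replicas=2
    )
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and passing it to `kubectl apply -f` would, under these assumptions, create the VM-backed pods in the same way as any other Deployment.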

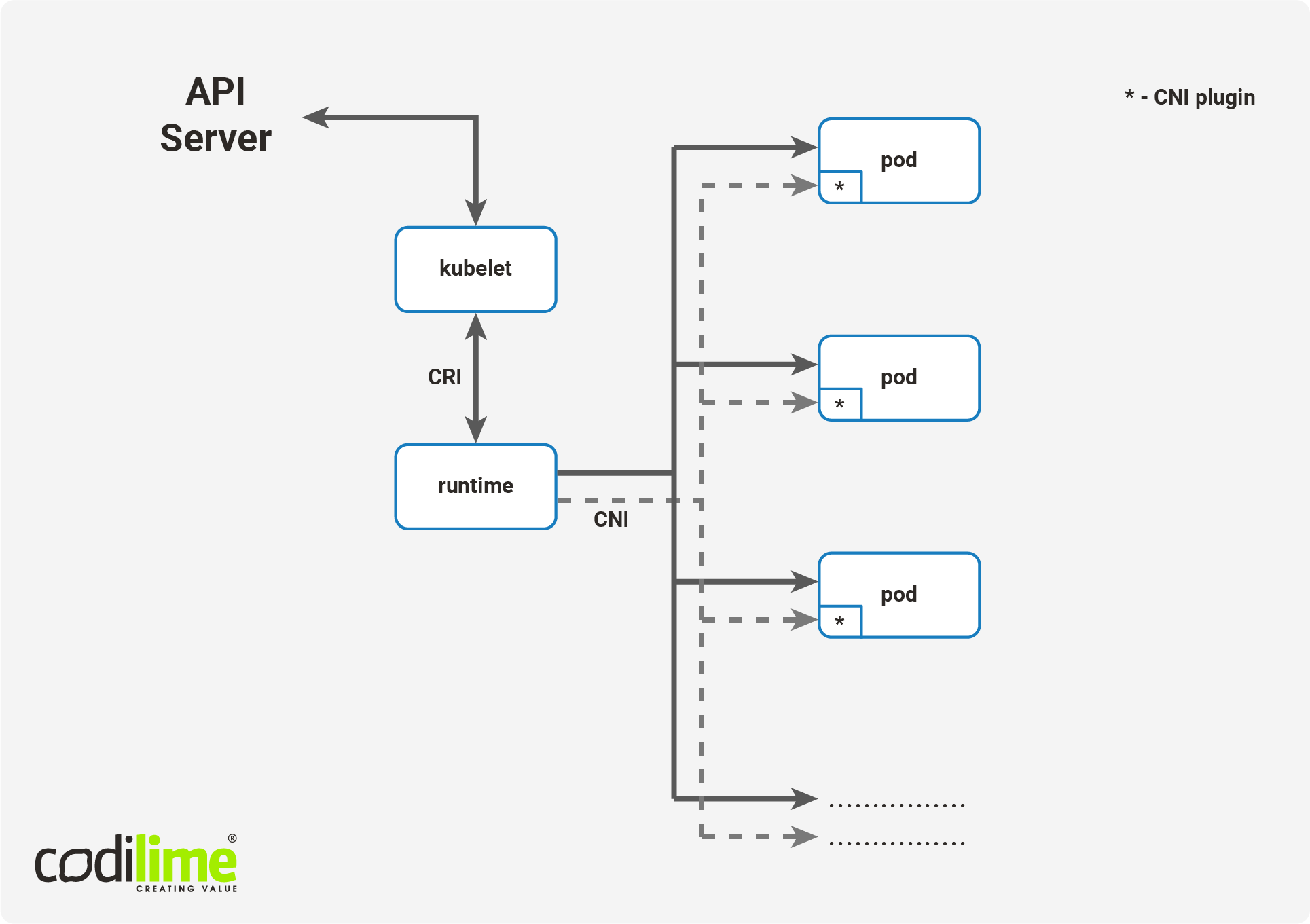

In this technical solution, two elements play a crucial role: Virtlet and the CRI (Container Runtime Interface). The CRI mechanism in Kubernetes is a plugin interface that enables the use of many different container runtimes without the need to recompile Kubernetes. Virtlet, on the other hand, allows Kubernetes to see VMs as pods, i.e. in the same way that dockershim sees containers. Virtlet is also CRI-native. It describes resource limits in the same way as for containers--that is, the definition of resource limits for containers and for VMs is identical and expressed by the same part of the yaml file. Virtlet also provides the same network configuration using CNI (an interface for configuring container networks, also used by Kubernetes). On top of this, we highly recommend using SR-IOV to ensure the best performance for NFV workloads. Virtlet runs qcow2-based VM images using libvirt, a toolkit for managing virtualization platforms. There is a single libvirt instance per node. Since Virtlet is a CRI implementation, all VMs will be seen in k8s as pods, so they can be part of other Kubernetes-native objects like Deployments, ReplicaSets, StatefulSets, etc.
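To make the point about identical resource definitions concrete, here is a short Python sketch that builds one container pod and one VM pod from the same helper. The image names are hypothetical, and the runtime annotation is an assumption based on Virtlet's examples. How a CPU limit maps onto the VM's virtual CPUs is a Virtlet detail this sketch does not cover.

```python
def workload_pod(name, image, limits, annotations=None):
    """Build a pod manifest; the same shape serves a container or a Virtlet VM."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "annotations": dict(annotations or {})},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Identical stanza regardless of what actually runs the workload.
                    "resources": {"limits": dict(limits)},
                }
            ]
        },
    }


limits = {"cpu": "2", "memory": "1Gi"}

# An ordinary containerized network function (hypothetical image name).
container_pod = workload_pod("dhcp-cnf", "registry.example.com/dhcp:1.0", limits)

# A VM-based network function handled by Virtlet (annotation and image prefix
# are assumptions taken from Virtlet's examples; the image location is made up).
vm_pod = workload_pod(
    "firewall-vnf",
    "virtlet.cloud/images.example.com/firewall.qcow2",
    limits,
    annotations={"kubernetes.io/target-runtime": "virtlet.cloud"},
)
```

The only differences between the two manifests are the image reference and the annotation that routes the pod to Virtlet; the `resources` section is the same in both.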

Key findings

It is true that Virtlet requires a great deal of configuration work, but it will pay off thanks to the following advantages:

- The same API handles both VMs and containers (e.g. kubectl logs/attach)

- The resources for VMs and containers are defined in the same way

- A VM becomes a native Kubernetes object and can be part of a DaemonSet, ReplicaSet, Deployment or any other object that has a native k8s controller

- Virtlet supports the SR-IOV interface, which ensures the performance and latency levels required for NFV workloads

- SR-IOV makes it possible to connect hardware directly with VMs

- Multiple NICs can be defined using CNI multiplexers like CNI-Genie. This feature is necessary to ensure that SR-IOV is used for user-facing traffic without conflicting with other network traffic such as intra-pod connectivity
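As a rough illustration of the last point, the sketch below adds a multi-network request to a pod manifest. It assumes that CNI-Genie selects plugins through a pod annotation named `cni` holding a comma-separated list, which is how we recall its documentation describing it; verify this against the CNI-Genie version you deploy. The plugin names are hypothetical and depend on what is installed on your nodes.

```python
import copy

# Assumption: CNI-Genie reads a pod annotation named "cni" that holds a
# comma-separated list of plugin names. Check this against your version.
CNI_GENIE_ANNOTATION = "cni"


def with_networks(pod, plugins):
    """Return a copy of a pod manifest that requests one NIC per listed CNI plugin."""
    if not plugins:
        raise ValueError("at least one CNI plugin is required")
    annotated = copy.deepcopy(pod)  # leave the caller's manifest untouched
    annotations = annotated.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[CNI_GENIE_ANNOTATION] = ",".join(plugins)
    return annotated


base_pod = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "firewall-vnf"}}

# Hypothetical plugin names: one for cluster traffic, one for the SR-IOV data plane.
multi_nic_pod = with_networks(base_pod, ["calico", "sriov"])
```

Keeping the cluster network and the SR-IOV network as separate entries is what lets user-facing traffic use the fast path while ordinary Kubernetes traffic stays on the default plugin.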