You may have already heard about network telemetry without being sure what exactly is behind it. If that's the case, this article explains it.

What is network telemetry?

The term "network telemetry" can be understood in different ways, and for years its meaning was not entirely clear to everyone. RFC 9232 defines the terms "network telemetry" and "telemetry data" as follows:

- “Network telemetry is a technology for gaining network insight and facilitating efficient and automated network management. It encompasses various techniques for remote data generation, collection, correlation, and consumption.”

- “Any information that can be extracted from networks (including the data plane, control plane, and management plane) and used to gain visibility or as a basis for actions is considered telemetry data. It includes statistics, event records and logs, snapshots of state, configuration data, etc.”

It is worth noting that the above definitions are very broad. At least two important conclusions can be drawn from them:

- In general, there is no single preferred technique for network telemetry as such (which, of course, does not mean that some techniques will not be better suited than others for specific use cases).

- Telemetry data is not limited to just one specific type of data. Telemetry data may include data of various kinds, for example:

  - values of configuration and operational parameters (gathered from network elements)

  - current values of different sorts of counters (e.g. the number of transmitted/received packets)

  - any information related to the current state of the network

  - packet samples or mirrored traffic streams

  - end-to-end measured values such as one-way delays, round-trip time (RTT), jitter, packet loss, etc.

This approach seems to make a lot of sense because it pushes strictly formal disputes into the background and focuses more on practical aspects.

Network telemetry - solutions characteristics

Existing network telemetry solutions are diverse, and it is not always easy to compare them directly, because different methods or protocols serve different purposes or work in fundamentally different ways. However, several criteria can help to classify them. Two commonly applied criteria are as follows:

- passive vs. active solutions - In short, active methods generate dedicated packet streams to measure specific metrics in a given network. Passive methods, in turn, rely only on observing existing traffic. Active methods include ping, TWAMP, traceroute, etc., while passive methods include sFlow and IPFIX, for example.

- pull vs. push working modes - In pull mode, data is acquired from the network on demand, i.e. through direct queries sent to the network devices. In push mode, network devices send data on their own once an external client has subscribed to specific data. This is sometimes called streaming network telemetry. In this model, the client does not have to constantly poll the devices; updates are sent to the client as soon as they appear. For example, SNMP and NETCONF natively work in pull mode, while push mode is available with gNMI or when YANG Push is used with NETCONF (or RESTCONF).
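The difference between the two working modes can be illustrated with a minimal Python sketch. It does not use any real protocol: the `Device` class, its `get` and `subscribe` methods, and the `rx_packets` counter are hypothetical names made up for this illustration only.

```python
from typing import Callable, Dict, List, Tuple

Update = Dict[str, int]


class Device:
    """Hypothetical network device exposing a single interface counter."""

    def __init__(self) -> None:
        self.rx_packets = 0
        self._subscribers: List[Callable[[Update], None]] = []

    def get(self) -> Update:
        """Pull mode: the client asks and the device answers once."""
        return {"rx_packets": self.rx_packets}

    def subscribe(self, callback: Callable[[Update], None]) -> None:
        """Push mode: the client registers once and then receives updates."""
        self._subscribers.append(callback)

    def receive_packets(self, count: int) -> None:
        """Simulate incoming traffic and notify every subscriber."""
        self.rx_packets += count
        for notify in self._subscribers:
            notify({"rx_packets": self.rx_packets})


def demo() -> Tuple[List[Update], List[Update]]:
    device = Device()
    pushed: List[Update] = []
    device.subscribe(pushed.append)

    polled: List[Update] = []
    device.receive_packets(10)
    device.receive_packets(5)
    polled.append(device.get())  # one poll after two counter changes
    device.receive_packets(7)
    polled.append(device.get())
    return polled, pushed
```

In this sketch, the polling client sees only two values and misses the intermediate one, while the subscriber receives all three updates without sending any further requests.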

Network telemetry - solutions overview

Over the years, many protocols and solutions for network telemetry have appeared. Here are the most representative examples:

- ping - Ping is a popular software diagnosis utility for IP networks available out of the box in many operating systems for PCs and network devices. Ping is used to test the reachability of hosts in the network. It uses ICMP Echo Request and Reply messages underneath. Ping typically reports RTT and packet loss.

- TWAMP (Two-Way Active Measurement Protocol) - TWAMP provides a method for measuring two-way or round-trip metrics. TWAMP measurements may include forward and backward delays, RTT, packet loss, jitter, etc. TWAMP does not rely on ICMP Echo messages. Instead, it uses TCP for its control session and UDP for the test packets.

- traceroute - Traceroute is another diagnostic tool for IP networks. It displays the entire routing path to a given destination and measures transit delays along this path. Depending on the implementation, traceroute can use ICMP, UDP or TCP packets.

- sFlow - sFlow is a legacy technology used to monitor network devices using packet sampling (taking 1 out of every N packets going through the interface in real time). The sampling is performed directly by the forwarding chip, provided that the chip supports sFlow. The data, i.e. sampled packets combined with other data such as interface counters, is exported over UDP to a remote data collector for further analysis.

- IPFIX - IPFIX is an open standard based on Cisco NetFlow v9 and is also supported by other vendors. Like sFlow, IPFIX exports data to an external data collector, but in most cases these are not sampled packets but aggregates of information related to particular flows in the network (a flow is a sequence of packets that matches a certain pattern). In this way, IPFIX allows a much higher precision of monitoring than simple packet sampling.

- SNMP (Simple Network Management Protocol) - For over thirty years, SNMP has been a widely used protocol for managing and monitoring network devices. The system architecture consists of a central manager (NMS - Network Management System) that communicates with agents installed on devices. SNMP works primarily in pull mode. Push mode is also supported (SNMP Trap), but it has some limitations.

- NETCONF - NETCONF is a protocol for network management and data collection. It uses the YANG standard for data modeling and XML for data encoding. The way NETCONF works is based on the RPC (Remote Procedure Call) paradigm, where network devices act as NETCONF servers and external applications (e.g. managers, controllers) establish sessions with them acting as clients. In the context of telemetry, clients pull the specified data stored in so-called datastores on servers.

- RESTCONF - RESTCONF is generally a similar protocol to NETCONF but uses HTTP.

- YANG Push - YANG Push is a set of standard specifications that allow for adding subscription functionality to NETCONF and RESTCONF. This way, clients can receive a stream of updates from a datastore.

- gNMI (gRPC Network Management Interface) - gNMI is a protocol based on the gRPC framework. As gRPC itself provides for various possible modes of message exchange between server and client, e.g. unidirectional or bidirectional streaming, gNMI natively supports a subscription-based telemetry model. gNMI works with YANG data models.

- INT/IOAM (In-band Network Telemetry/In-situ OAM) - INT and IOAM are twin concepts for so-called in-band network telemetry. This is a specific approach to telemetry, significantly different from those mentioned above. The idea here is to collect telemetry metadata for packets such as ingress/egress timestamps, routing path, latency the packet has experienced, queue occupancy in a given network device the packet visits, etc., and to embed them into packets (as headers, not data) when they traverse their routing paths. This approach offers a very detailed view of what is going on in the network but also brings many issues to address. To learn more, check out the following blog post about Inband network telemetry (INT).
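To make the contrast between packet sampling (as in sFlow) and flow aggregation (as in IPFIX) more tangible, here is a small Python sketch. It is only an illustration under simplifying assumptions: packets are modeled as hypothetical `(source, destination, size)` tuples, a flow is identified by the source and destination pair alone, and sampling is deterministic (every N-th packet), whereas real implementations typically randomize the choice.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Packet = Tuple[str, str, int]  # (source, destination, size in bytes)
FlowKey = Tuple[str, str]      # simplified flow identifier


def sample_one_in_n(packets: Iterable[Packet], n: int) -> List[Packet]:
    """Packet sampling: keep every n-th packet, drop the rest."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [p for i, p in enumerate(packets, start=1) if i % n == 0]


def aggregate_flows(packets: Iterable[Packet]) -> Dict[FlowKey, Dict[str, int]]:
    """Flow aggregation: account for every packet, export per-flow totals."""
    flows: Dict[FlowKey, Dict[str, int]] = defaultdict(
        lambda: {"packets": 0, "bytes": 0}
    )
    for src, dst, size in packets:
        flows[(src, dst)]["packets"] += 1
        flows[(src, dst)]["bytes"] += size
    return dict(flows)


traffic: List[Packet] = [("a", "b", 100)] * 4 + [("c", "d", 50)] * 2
samples = sample_one_in_n(traffic, 3)  # a subset of the raw packets
flows = aggregate_flows(traffic)       # exact totals for each flow
```

Sampling exports a fraction of the packets and leaves it to the collector to extrapolate, while aggregation counts every packet but exports only per-flow summaries.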

Challenges and requirements

Today, observability has become one of the most important logical components of many applications and systems. To achieve it, data must be acquired effectively and then processed efficiently. This also creates specific challenges for network telemetry. The most important are as follows:

Fine-grained visibility - telemetry data

It is important to provide applications that monitor the network environment with the appropriate level of insight. Put simply, there must be enough data, and the data must be reliable in the sense that it reflects the actual state of the network or the network device at a given moment in time. It is worth realizing that today telemetry data is consumed mainly by machines and less often by humans. This allows for faster response to events and for automation of decision-making processes in the broad sense, but it requires precise data. Traditional data collection based on low-frequency polling does not work well under such assumptions. Instead, subscription-based data streaming mechanisms seem to be generally better suited to today's needs.

Limited impact on the network

At the same time, it is important to ensure that the network is not overloaded with telemetry data. The number of different types of metrics that can be obtained from the network can be huge. However, in many cases, specific network monitoring applications do not need to consume all available types of data at a given time, but only certain subsets of them.

That is why it is very important to decide what kind of data is really needed. Otherwise, the very high-volume telemetry traffic can lead to unnecessary network load or even congestion, and it can affect the forwarding performance of devices in the context of user traffic (in cases where there is no appropriate network traffic engineering applied that can deal with such phenomena).

In general, the impact of telemetry traffic on user traffic should be as small as possible. This applies to both the telemetry data volume (it must be tailored to the needs of monitoring applications but also to network capacity) and the measurement techniques that are applied (interference with user traffic and its indirect effects should be avoided).
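A rough back-of-the-envelope estimate shows why limiting the set of subscribed metrics matters. The Python sketch below is a simplification: the function name and all input figures are assumptions chosen for illustration, and protocol overhead, compression and on-change (rather than periodic) updates are ignored.

```python
def telemetry_rate_bps(
    devices: int,
    metrics_per_device: int,
    bytes_per_update: int,
    interval_s: float,
) -> float:
    """Estimate the aggregate telemetry rate in bits per second.

    Assumes every metric on every device is exported once per interval.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return devices * metrics_per_device * bytes_per_update * 8 / interval_s


# Assumed figures: 100 devices, 100-byte updates, one update every 10 seconds.
all_metrics = telemetry_rate_bps(100, 1000, 100, 10)  # every metric subscribed
needed_only = telemetry_rate_bps(100, 50, 100, 10)    # only a 50-metric subset
```

With these assumed figures, subscribing to everything yields about 8 Mbit/s of telemetry traffic, while the 50-metric subset needs about 0.4 Mbit/s.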

Standardized data models

In many cases, the telemetry data consumed by individual applications will come from many different sources, e.g. different network domains, different network devices, different types of devices (physical and virtual), and different planes within a network device (forwarding plane, control plane, management plane). The obvious challenge for the application here is to properly correlate the data in order to draw appropriate conclusions from it. An equally important issue is data modeling. When telemetry data is properly modeled using standard data models (based on YANG data modeling, for example), it is easier for applications to parse it and then operate on it.
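The benefit of a common data model can be sketched in a few lines of Python. Everything in this example is hypothetical: the two "vendor" record layouts, their field names and the `InterfaceCounters` structure are invented for illustration and do not correspond to any real YANG model or device output.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class InterfaceCounters:
    """Hypothetical common model that every consumer can rely on."""

    device: str
    interface: str
    in_packets: int
    out_packets: int


def from_vendor_a(device: str, raw: Dict[str, Any]) -> InterfaceCounters:
    """Map an assumed flat record layout to the common model."""
    return InterfaceCounters(
        device, raw["ifName"], int(raw["rxPkts"]), int(raw["txPkts"])
    )


def from_vendor_b(device: str, raw: Dict[str, Any]) -> InterfaceCounters:
    """Map an assumed nested record layout to the common model."""
    counters = raw["counters"]
    return InterfaceCounters(
        device, raw["port"], int(counters["ingress"]), int(counters["egress"])
    )


record_a = from_vendor_a("leaf1", {"ifName": "eth0", "rxPkts": "120", "txPkts": "80"})
record_b = from_vendor_b(
    "spine1", {"port": "eth0", "counters": {"ingress": 300, "egress": 250}}
)
total_in = record_a.in_packets + record_b.in_packets
```

Once both records share one shape, correlating them (here, simply summing the inbound packet counts) no longer requires any vendor-specific logic in the consuming application.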

Proper protocols, encoding and transport techniques

It is worth noting that the different planes within a network device each have their own specifics. Since the main task of the forwarding plane is very fast packet processing, forwarding chips are optimized for this kind of work. However, it is not their role to perform complex operations on data or to support stateful connections. CPUs (the main control CPU or dedicated service card CPUs, if present), around which the functionality of the control plane and management plane is built, are much better suited for this.

However, CPUs have some limitations related to packet processing speed. If you want to build a telemetry system that is optimal in terms of performance, you may want to collect telemetry directly from each plane. In this case, the forwarding plane data will be exported directly by the forwarding chip, and the control plane and management plane data will be exported from the CPUs. For each case, the optimal data encoding format, protocol and transport mechanism should be selected. For example, for the forwarding plane, you may choose IPFIX over UDP (one of the transport protocols IPFIX can use), and for the management plane (and usually also for the control plane), you would rather use NETCONF, RESTCONF or gRPC, which are based on TCP.

A broader perspective

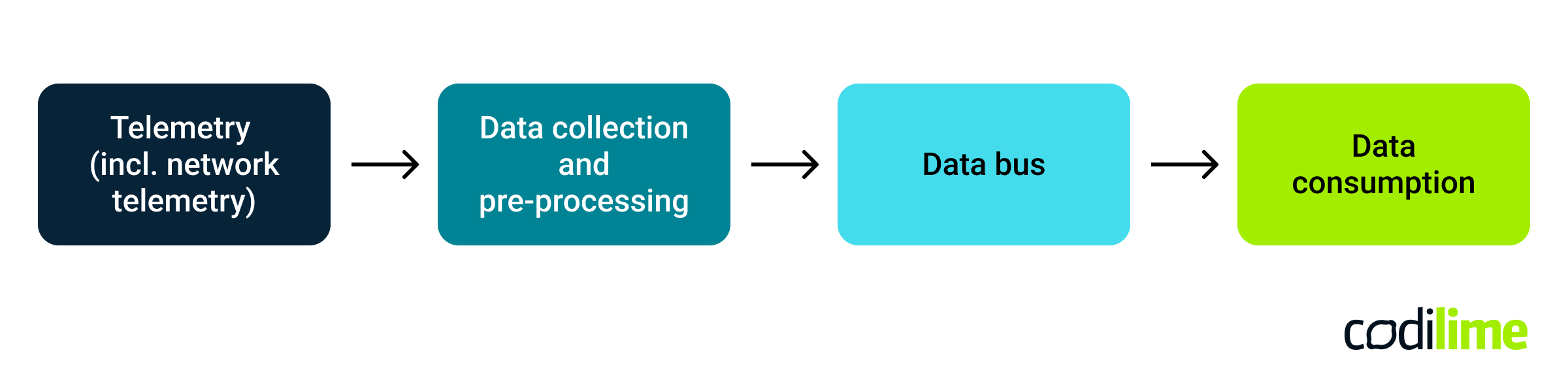

It is good to know that network telemetry is not something isolated. It is usually part of a larger whole, e.g. a system containing many logical components, of which network telemetry is just one. For example, imagine a data center where bare metal compute nodes are connected to a leaf-spine fabric (quite a typical deployment). You want to build a system that will identify all sorts of issues related to infrastructure (including the network) and/or will generate appropriate alerts before they occur to give you time to react accordingly. The high-level logic of such a system can be described as follows (see Fig. 1):

- Telemetry (including network telemetry) - this includes all telemetry mechanisms used at the infrastructure level, both for compute nodes and network devices

- Data collection and pre-processing - a set of agents/clients that receive raw telemetry data and transform it if needed

- Data bus - a distributed data streaming system; it could be Kafka, for example, or some similar solution

- Data consumption - various types of applications that operate on telemetry data to perform visualization, diagnostics, alerting, trending, forecasting, etc., depending on the business logic that has to be implemented in a given system.
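The four components listed above can be mimicked with a minimal in-process Python sketch. It rests on several assumptions: a standard-library `queue.Queue` stands in for a real distributed data bus such as Kafka, telemetry arrives as JSON lines, and the record fields `node` and `cpu_percent` as well as all function names are hypothetical.

```python
import json
import queue
from typing import Any, Dict, Iterable, List

Record = Dict[str, Any]


def collect(raw_lines: Iterable[str]) -> List[Record]:
    """Data collection and pre-processing: parse raw input, drop malformed lines."""
    records: List[Record] = []
    for line in raw_lines:
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError:
            continue  # skip anything that is not valid JSON
    return records


def publish(bus: "queue.Queue[Record]", records: Iterable[Record]) -> None:
    """Data bus: hand the pre-processed records over to the consumers."""
    for record in records:
        bus.put(record)


def consume_alerts(bus: "queue.Queue[Record]", threshold: float) -> List[str]:
    """Data consumption: a toy alerting application with a fixed CPU threshold."""
    alerts: List[str] = []
    while not bus.empty():
        record = bus.get()
        if record["cpu_percent"] > threshold:
            alerts.append(record["node"])
    return alerts


# Telemetry: raw data as it might arrive from compute nodes and network devices.
raw = [
    '{"node": "leaf1", "cpu_percent": 95}',
    "garbage",
    '{"node": "spine1", "cpu_percent": 20}',
]
bus: "queue.Queue[Record]" = queue.Queue()
publish(bus, collect(raw))
alerts = consume_alerts(bus, threshold=90)
```

Keeping the stages separate means that a new consumer (for example, a trending or forecasting application) can be attached to the bus without touching the collection side.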

Summary

Network telemetry includes various techniques, protocols, and methodologies. It is quite a broad term. However, it is natural that today's needs in the context of network telemetry are a little different than, say, 20 years ago. The way network functions are implemented has changed (more virtual appliances are present), there are new runtime environments (a shift to data centers and clouds), architectural models have evolved, and new protocols, APIs, etc., have appeared. There has also been a general shift towards IT approaches and methodologies. Therefore, it can be expected that the essence of network telemetry will increasingly move from old classic terms like "network monitoring" towards such terms as observability, fine-grained visibility, network data subscription and consumption, etc.