This article was updated on 7/22/2021

Both CI/CD and the benefits of automating software delivery were widely discussed during the FOSDEM conference held in Brussels on 1-2 February 2020. Our team was in the center of the action and brought back a great deal of news from the CI/CD world. Read on to learn all about it.

What you should keep doing in CI/CD in 2021

Successfully implementing a CI/CD pipeline means measuring, and measuring everything. Gathering and monitoring extended statistics gives you full control over what is happening in your CI/CD process. It is good practice to track code coverage, so that developers know how much of their code the tests actually exercise. Moreover, you should monitor servers to ensure they are working properly.

It is also worth noting that your CI/CD setup should not be limited to handling only one build. Every developer should be able to add a new piece of code while the CI/CD pipeline is running. Once it has been merged, the new code should trigger the creation of another build. In other words, your CI/CD setup should enable you to run multiple builds simultaneously.

When creating containers, it is good practice to avoid dependencies. Still, in many cases this is not possible. The more complicated your build, the more dependencies must be taken into consideration while merging new code. A plugin may have been updated recently and no longer work properly in your new build. To avoid such situations, use dependency management tools, such as Maven, to manage your dependencies like a boss.

Additionally, it is always good to have an ideal CI/CD pipeline to work toward. While in many cases there is no technical or business need that would require all of the stages of such a pipeline, let’s nonetheless enumerate them all here. First, there are the checks that verify whether an environment with newly merged code runs hitch-free. Second comes compiling statistics covering every aspect of the continuous integration pipeline. Third, you need automated tests, as without them it is virtually impossible to ensure the new code is up to snuff. The fourth element is compiling the project code with the newly added changes and building it into a deployable version. Artifact testing, which checks whether the artifacts that make up a build work as expected, rounds out the list. If everything goes well, you package your build and then upload the final product for deployment.
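
The stage order described above can be sketched as a simple sequential runner. This is only an illustration: the stage names are my own shorthand for the stages in the text, each check is a stub that always passes, and a real pipeline would be defined in your CI/CD tool rather than in a script like this.

```python
from typing import Callable, List, Tuple

# Each stage is a (name, check) pair; the check returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, check in stages:
        if not check():
            break  # a failed stage blocks everything after it
        completed.append(name)
    return completed


# Hypothetical stage names following the order given in the text.
# Every check here is a stub that always passes.
IDEAL_PIPELINE: List[Stage] = [
    ("environment checks", lambda: True),
    ("statistics", lambda: True),
    ("automated tests", lambda: True),
    ("build", lambda: True),
    ("artifact tests", lambda: True),
    ("package and upload", lambda: True),
]
```

Calling `run_pipeline(IDEAL_PIPELINE)` returns all six stage names. If any check returns `False`, the list ends just before the failed stage.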

Last but not least, communication is essential. I mean not only communication inside a team, but also across different teams. Communication silos are as harmful as technical ones, if not more so. To avoid them, give your team a flat structure.

Communication is also crucial for pipelines. It often happens that you build a project with numerous components from different vendors. You must ensure that their components communicate with each other correctly, for example by creating endpoints in the CI/CD pipeline that collect the information that a given stage is finished and the process can proceed.
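
As a rough sketch of that idea, the class below stands in for such an endpoint: each component reports when it has finished a stage, and the pipeline proceeds only once all of them have reported. The class, method, and component names are hypothetical, and the state is kept in memory, whereas a real setup would expose this over HTTP or through the CI/CD tool itself.

```python
from typing import Dict, Iterable, Set


class StageTracker:
    """In-memory stand-in for an endpoint that collects 'stage finished' reports."""

    def __init__(self, components: Iterable[str]) -> None:
        # Every component listed here must report before a stage counts as done.
        self.components: Set[str] = set(components)
        self.finished: Dict[str, Set[str]] = {}

    def report_finished(self, stage: str, component: str) -> None:
        """Record that `component` has finished `stage`."""
        if component not in self.components:
            raise ValueError(f"unknown component: {component}")
        self.finished.setdefault(stage, set()).add(component)

    def can_proceed(self, stage: str) -> bool:
        """True once every known component has reported `stage` as finished."""
        return self.finished.get(stage, set()) == self.components
```

With two components, `can_proceed("build")` stays `False` after the first report and becomes `True` only after the second.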

What you should start doing in CI/CD in 2021

There are also a number of new developments worth noting in the CI/CD world. First, security tests are becoming more and more important. Alongside the standard automated tests that check code for possible bugs, pipelines now also check the code for possible security flaws.

Secondly, version control software vendors want to have the CI/CD pipeline on their own platform. For example, there is GitHub Actions, a solution that allows you not only to keep your code in a GitHub repository, but also to execute tests on the same platform. Similarly, GitLab Runner allows you to run automated tests and streamline your CI/CD process. Better still, it can run on Kubernetes, now a standard container orchestration technology.

As with other popular terms like DevOps or TestOps, the recently coined GitOps puts two words together: Git, a popular version control system, and Ops, short for Operations. Essentially, GitOps is an approach to implementing Continuous Deployment for cloud-native applications. GitOps’ raison d’être is to store declarative descriptions of infrastructure in a Git repository, which becomes a single source of truth. An automated process ensures that the production environment is configured exactly as it is described in the repo. When deploying a new application or updating an existing one, you only have to update the description in the repository. The rest is handled by the automated process.
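
The core of that automated process is a comparison between the state described in the repository and the state actually running. The sketch below shows the idea under heavy simplification: both states are plain dictionaries mapping an application name to a version, and the function only plans actions rather than applying them. The function name and the action strings are my own, not those of any particular GitOps tool.

```python
from typing import Dict, List


def plan_reconcile(desired: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """Return the actions needed to make `actual` match `desired`.

    Both arguments map an application name to its version.
    `desired` plays the role of the description stored in Git.
    """
    actions: List[str] = []
    for app, version in sorted(desired.items()):
        if app not in actual:
            actions.append(f"deploy {app}@{version}")
        elif actual[app] != version:
            actions.append(f"update {app} {actual[app]} -> {version}")
    # Anything running that the repository no longer describes is removed.
    for app in sorted(actual.keys() - desired.keys()):
        actions.append(f"remove {app}")
    return actions
```

When the two states already match, the plan is empty, which is the steady state a GitOps setup aims for.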

GitOps offers several appreciable advantages. You can deploy more often and faster, as you don’t have to switch tools to deploy your applications. The Git repository holds a complete history of the changes made to your environment over time, making post-crash recoveries fast and easy. Credential management is simplified, as deployments are managed from inside the environment, so your developers do not need direct access to it. The most essential feature, however, is the controlled way in which changes reach the environment. Every change must be introduced through the repository, so you can always check who introduced it and for what reason. If an environment configuration file is stored on a server where everybody has access to it, changes may be introduced without any approval or justification. GitOps helps you avoid such unpleasant surprises.

Another term gaining more recognition is observability, the ability to gain insight into the internal states of a system by examining its external outputs. Unlike monitoring, which is a set of actions you take to ensure that your software is working properly, observability is a property of the software itself. As such, it is introduced at the software development stage. This means it is the developers’ responsibility to build observability into their software.

What deployment strategy you should pick in 2021

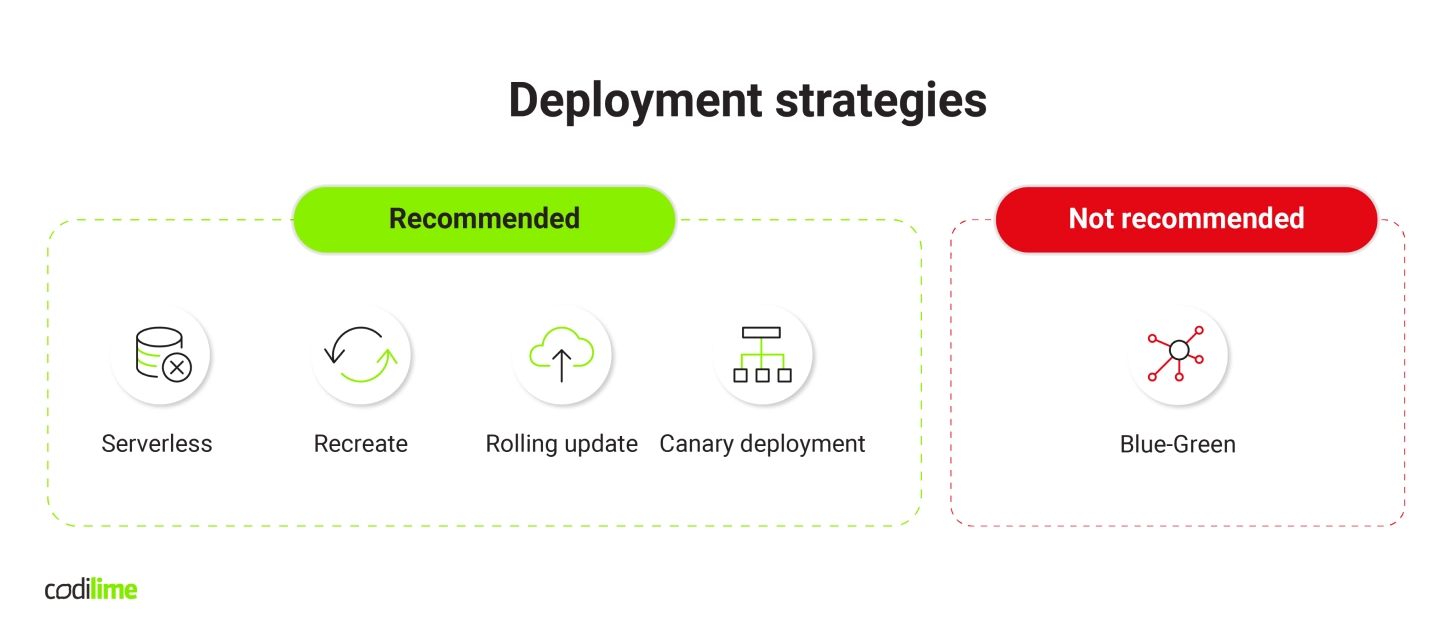

The deployment strategy you choose will affect your architecture, testing, and monitoring. And the other way round, architecture, testing, and monitoring affect the way you deploy. All these elements are tightly linked, so it is crucial that your deployment strategy be adjusted to the application you are going to deploy. I consider these four strategies to be the best: serverless, recreate, rolling update and canary deployment. The fifth one, Blue-Green, is described for the sake of completeness, but it is not recommended.

Serverless deployment offers very good elasticity, allowing you to scale your application up and down depending on current usage. For example, if you use the Knative platform based on Kubernetes to deploy and manage your apps, it will automatically create as many replicas of your application as are needed to serve the requests coming from users. If there is no traffic, existing replicas will simply be killed. This is a cost-effective solution, as you pay only for actual usage. Additionally, it offers high availability of extra computing resources. This strategy is a good choice when scalability is a key factor or app usage fluctuates considerably.
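
To make the scaling behavior concrete, here is a deliberately simplified rule that derives a replica count from the number of requests in flight. It is not Knative’s actual autoscaling algorithm. The function name, the target of 10 requests per replica, and the cap of 100 replicas are assumptions chosen for illustration.

```python
import math


def desired_replicas(
    in_flight_requests: int,
    target_per_replica: int = 10,
    max_replicas: int = 100,
) -> int:
    """Simplified request-based scaling rule (illustrative, not Knative's own).

    Scales to zero when there is no traffic, otherwise runs just enough
    replicas to keep each one at or below `target_per_replica` requests.
    """
    if in_flight_requests < 0 or target_per_replica <= 0 or max_replicas <= 0:
        raise ValueError("arguments must be non-negative / positive")
    if in_flight_requests == 0:
        return 0  # no traffic: every replica can be shut down
    needed = math.ceil(in_flight_requests / target_per_replica)
    return min(max_replicas, needed)
```

With these defaults, 25 requests in flight call for 3 replicas, and no traffic at all calls for none, which is where the pay-per-use savings come from.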

The recreate strategy is pretty simple: you shut down all instances of version A at once and then deploy version B, which replaces it. This technique results in service downtime, the length of which depends on two factors: how long it takes to shut down the older version and how long it takes to launch the newer one. Because of this downtime, this scenario is recommended for legacy non-cloud-native applications that cannot be scaled and are stateful without replication.

The next deployment scenario is a rolling update. It starts when an old application replica is killed and replaced by a new version, with the traffic going to both versions. The process continues gradually until all old replicas are replaced by new ones. It offers high availability and responsiveness, as there is no service downtime, but it is not as cost-effective as the serverless strategy. Additionally, an update is harder to roll back in case of a failure, so this scenario is recommended for cloud-native applications where, for whatever reason, you cannot use canary deployment.
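
The gradual replacement can be pictured with a small simulation that tracks how many old and new replicas exist after each step. It assumes the kill-then-replace order described above, and the function name and `batch_size` parameter are hypothetical. Real orchestrators add health checks and surge settings that are left out here.

```python
from typing import List, Tuple


def rolling_update(replicas: int, batch_size: int = 1) -> List[Tuple[int, int]]:
    """Simulate a rolling update.

    Returns the (old, new) replica counts after each step. The total
    stays constant, so both versions serve traffic until the last step.
    """
    if replicas <= 0 or batch_size <= 0:
        raise ValueError("replicas and batch_size must be positive")
    old, new = replicas, 0
    steps: List[Tuple[int, int]] = []
    while old > 0:
        replaced = min(batch_size, old)  # the last batch may be smaller
        old -= replaced
        new += replaced
        steps.append((old, new))
    return steps
```

For three replicas updated one at a time, the simulation yields `(2, 1)`, `(1, 2)` and `(0, 3)`, showing the window during which traffic reaches both versions.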

The canary deployment scenario begins when a new release is deployed and a portion of the traffic is redirected to it. The rest of the traffic still goes to the older versions. The metrics on the traffic going to the new version are collected and sent to a database, where they are analyzed. If no problem is detected, the percentage of traffic that goes to the canary version is increased and the traffic going to the older version is decreased. As soon as all traffic is redirected to the canary version, the older versions are updated to the new release and the canary version is shut down. The traffic is then reconfigured to go to these updated versions. The main advantage offered by this strategy is that you can roll back changes if an error is detected. Although it is not a cost-effective solution, it is recommended in place of rolling updates when you need extra control over the entire update process and the ability to roll back changes.
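
The promote-or-roll-back loop at the heart of this scenario might look like the sketch below. Everything in it is an assumption made for illustration: the traffic steps, the 1% error-rate threshold, and the `error_rate_at` callback, which stands in for querying your metrics database at a given canary traffic weight.

```python
from typing import Callable, List, Sequence, Tuple


def run_canary(
    error_rate_at: Callable[[int], float],
    steps: Sequence[int] = (10, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> Tuple[str, List[int]]:
    """Shift traffic to the canary step by step, rolling back on bad metrics.

    `error_rate_at(weight)` is a hypothetical hook returning the observed
    error rate while `weight` percent of traffic goes to the canary.
    Returns the outcome and the traffic weights that were tried.
    """
    tried: List[int] = []
    for weight in steps:
        tried.append(weight)
        if error_rate_at(weight) > max_error_rate:
            # Problem detected: send all traffic back to the old version.
            return "rolled_back", tried
    # Every step stayed healthy, so the new release can be promoted.
    return "promoted", tried
```

A canary whose error rate stays at zero is promoted after the last step, while one that starts failing at 50% of traffic is rolled back there and never reaches the remaining users.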

The last deployment strategy is Blue-Green, which pales in comparison to the previous four deployment techniques, and so is better avoided. Basically, it consists of running two identical production environments called Blue and Green. An update is rolled out and tested on Green. Once it passes, all traffic is switched to Green and Blue is disabled. This strategy requires you to maintain a disabled environment for future releases, which generates additional costs (see Figure 1).

Why progressive delivery techniques are gaining ever more fans

Progressive deployment is becoming more and more popular in the business community. Canary deployment, rolling deployment and Blue-Green (which is very costly and therefore not recommended) are the three basic progressive deployment techniques. Progressive deployment means not releasing a new build everywhere at once, but first testing how it works on a limited number of servers. In this way, only a limited number of users are affected. It also allows you to gather metrics on the traffic in this partial deployment and analyze them. If something goes wrong, the build should be automatically rolled back to the previous version.

This approach offers several appreciable advantages. Firstly, should any problem occur, you avoid downtime, as changes are rolled back automatically. Secondly, the blast radius is limited, as only some of your app users will be directly affected by changes. Monitoring deployment metrics allows you to detect any problem immediately and react automatically before the user notices that something is not working. You should know that the problem exists before your users inform you about it. In this way, monitoring becomes the new testing. When deploying a new release, it is important to gather as many metrics as possible to know exactly when users are experiencing issues in production. The ultimate goal of such an approach is to make users happy with your application. Additionally, choosing this strategy allows you to shorten your ideas’ time to market. If you can easily test them in a real production environment, you can assess more quickly whether they are working or not.

To ensure that you have enough metrics, you’ll need to use appropriate tools. To collect them in a Kubernetes setup, you can use Istio. The collected data is then sent to Prometheus, a monitoring and alerting tool. If you’re using canary deployment, you can consider Flagger, a Kubernetes operator that automates canary promotion using metrics from Istio and Prometheus, with Grafana for visualizing them.

Continuous Delivery Foundation to foster the development of CI/CD tools

Given the importance of CI/CD in IT, and the growing demand for open-source CI/CD tools, it is no wonder a dedicated organisation has been created by the user community: the Continuous Delivery Foundation (CDF). Working under the umbrella of the Linux Foundation, CDF promotes the development of vendor-neutral projects for CI/CD. It hosts such projects as Jenkins, an open-source automation server that helps automate non-human tasks in the software development process; Jenkins X, a tool to automate pipelines with a built-in GitOps feature and with preview environments; and Tekton, a Kubernetes-native open-source framework enabling the creation of CI/CD pipelines.

CI/CD process in 2021

As you can see, there is no end to the fascinating topics surrounding CI/CD, a field in full digital bloom. This makes us believe that 2021 will be a colorful year with many more new developments.